Archive

Simply Notice…

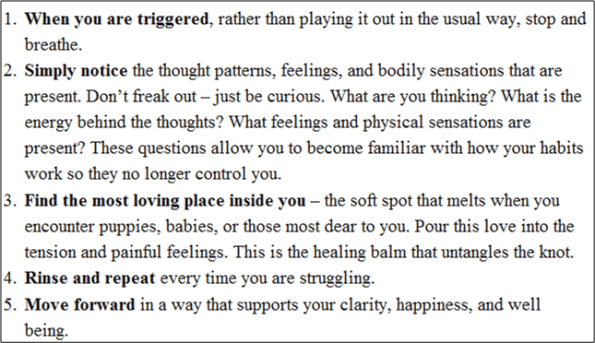

I’ve been following Leo Babauta, the creator of ZenHabits.net, for several years. Check out his inspirational story of personal transformation here: “About Leo”. In a recent Zen Habits newsletter, guest poster Gail Brenner suggested the following minimalist guide to inner peace:

This list recently came to mind while I was reading some complicated and messy library code to understand how to incorporate its functionality into my own code mess. Surprise! The source code was dense, and it required a deeper mental stack than the shallow one in my head, so I did what I usually do in those situations: I started getting pissed off. D’oh! Then, out of nowhere, step #2 in Gail’s list came to mind. I won’t answer the questions posed in that step, but you can imagine that my answers weren’t positive.

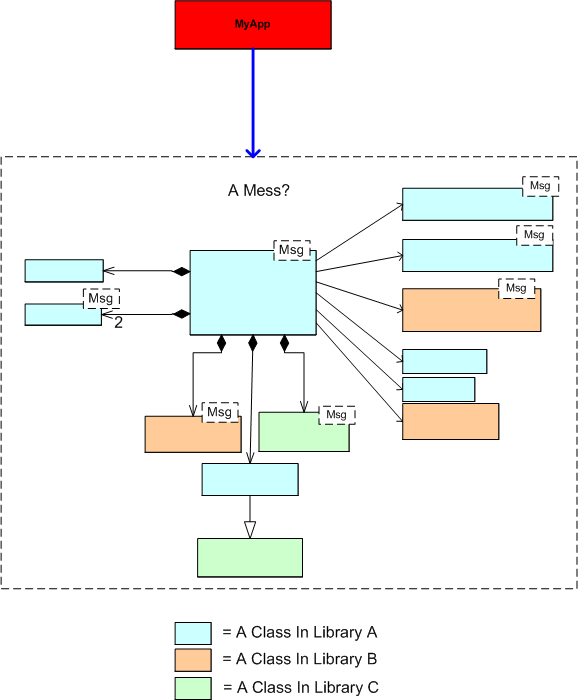

So, what did I do next? I briefly looked at step 3, and then I careened off course. I went out to stalk the library code author with the intent of ripping him/her a new one. Hah, just joking! What I really did was this: I stepped back from the hundreds of lines of source code and reverse-engineered a simpler, abstracted class diagram of the mess. By distancing myself from the code and using the more abstract class diagram to understand it, I came to the same conclusion: it was a freakin’ mess. D’oh!

Are you wondering what my next step was? I ain’t tellin’.

Start Big?

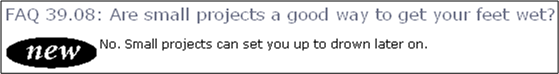

While I was browsing through the “C++ FAQs”, this particular FAQ caught my eye:

The authors’ “No” answer surprised me at first because I had previously thought the answer was an obvious “Yes”. However, the rationale behind their collective “No” was compelling. Rather than butcher and fragment their answer with a cut-and-paste summary, I present their elegant and lucid prose as is:

Small projects, whose intellectual content can be understood by one intelligent person, build exactly the wrong skills and attitudes for success on large projects. … The experience of the industry has been that small projects succeed most often when there are a few highly intelligent people involved who use a minimum of process and are willing to rip things apart and start over when a design flaw is discovered. A small program can be desk-checked by senior people to discover many of the errors, and static type checking and const correctness on a small project can be more grief than they are worth. Bad inheritance can be fixed in many ways, including changing all the code that relied on the base class to reflect the new derived class. Breaking interfaces is not the end of the world because there aren’t that many interconnections to start with. Finally, source code control systems and formalized build procedures can slow down progress.

On the other hand, big projects require more people, which implies that the average skill level will be lower because there are only so many geniuses to start with, and they usually don’t get along with each other that well, anyway. Since the volume of code is too large for any one person to comprehend, it is imperative that processes be used to formalize and communicate the work effort and that the project be decomposed into manageable chunks. Big programs need automated help to catch programming errors, and this is where the payback for static type checking and const correctness can be significant. There is usually so much code based on the promises of base classes that there is no alternative to following proper inheritance for all the derived classes; the cost of changing everything that relied on the base class promises could be prohibitive. Breaking an interface is a major undertaking, because there are so many possible ripple effects. Source code control systems and formalized build processes are necessary to avoid the confusion that arises otherwise.

So the issue is not just that big projects are different. The approaches and attitudes to small and large projects are so diametrically opposed that success with small projects breeds habits that do not scale and can lead to failure of large projects.

After reading this, I initially changed my previously unexamined opinion. However, upon further reflection, a queasy feeling arose in my stomach, because the authors imply that the code bases of big projects aren’t as messy and undisciplined as those of small projects. They also seem to imply that disciplined use of processes and tools correlates strongly with a clean code base, and that developers, knowing that the system will be large, will somehow change their behavior. My intuition and personal experience tell me that this may not be true, especially for large code bases that have been around for a long time and have been heavily hacked by lots of programmers (both novice and expert) under schedule pressure.

Small projects may set you up to drown later on, but big projects may start to drown you immediately. What are your thoughts, start small or big?

Static Vs Auto Performance

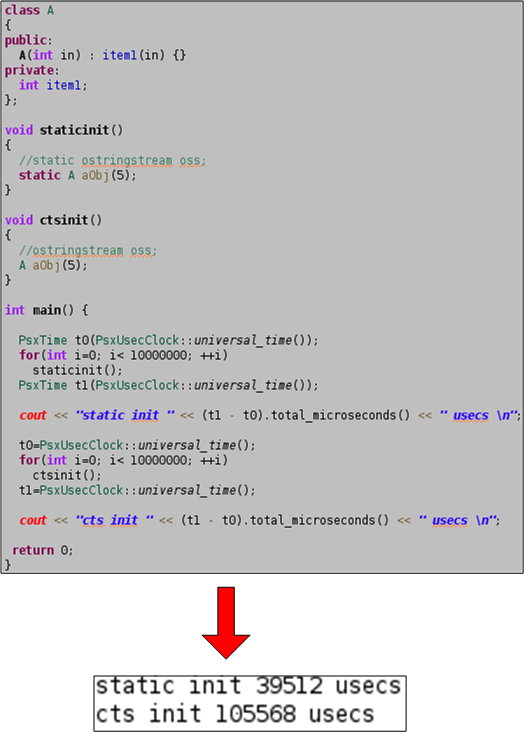

Assume that you’re writing a function that’s called every 5 milliseconds (200 Hz) in a real-time application and that thread-safety for this function is not a factor (it will be called only within one thread of control). Next, assume that you need to use one or more temporary objects to implement the logic in your function. Should you instantiate your objects as static or as auto on the stack?

Unless I’ve made a mental mistake, the source code and results below confirm that using static objects is much more efficient than using auto objects. The code measures the time it takes to make 10 million calls to a function that uses a static std::ostringstream versus one that uses an auto std::ostringstream. The huge performance difference makes sense, since the static object is constructed only during the first function call while the auto object is constructed (and destroyed) 10 million times.

It’s likely that std::ostringstream objects are large, but what about small, simple user types? The code below attempts to answer the question by showing that the performance difference between static and auto is still substantial for a trivial object like an instance of class A. I’ve been using this technique for years, but I’ve never quantitatively investigated the static vs auto performance difference until now.

I was motivated to perform this experiment after reading the splendid “Efficient C++”. In the book, authors Bulka and Mayhew show the results of little experiments like these for various C++ programming techniques and idioms. Unlike most classic C++ books, which sprinkle comments and insights about performance tradeoffs throughout the text, the entire book is dedicated to the topic. I highly recommend it for fledgling and intermediate C++ programmers.

We’ve All Had Those Days

OMG! Last night, when I was sittin’ near the fire in my dinner jacket, sippin’ my glass of cognac, smokin’ my pipe, pettin’ my dog, and intently reading Sutter & Alexandrescu’s classic “C++ Coding Standards: 101 Rules, Guidelines, and Best Practices”, I came across this curious passage:

Can you remember a time when you wrote code that used the standard library (for example) and got mysterious and incomprehensible compiler errors? And you kept slightly rearranging your code and recompiling, and rearranging some more and compiling some more, until the mysterious compile errors went away, and then you happily continued on—with at best a faint nagging curiosity about why the compiler didn’t like the only-ever-so-slightly different arrangement of the code you wrote at first? We’ve all had those days…

What on earth are they talking about? That’s never happened to me. What about you?

Measuring Clock Resolution

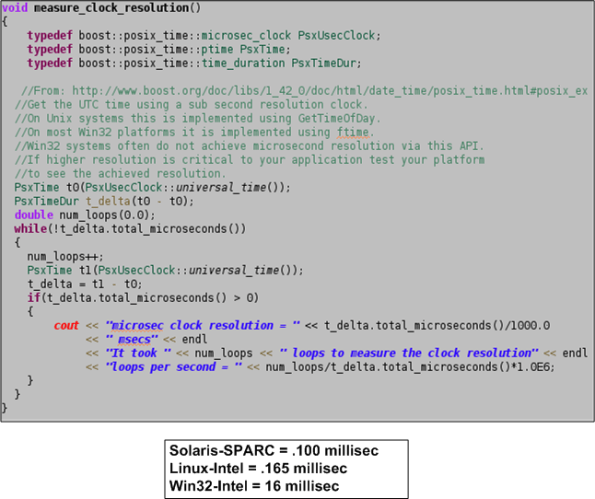

The Boost.Date_Time C++ library provides an excellent, platform-independent set of interrelated classes for measuring and tracking times and dates during program operation. It is much more capable and, more importantly, more accurate than the standard C++ <ctime> library inherited from C. Since we need to benchmark the average and peak latency of our growing distributed, real-time system infrastructure running on Linux, Solaris and (maybe) Win32 platforms, I decided to use Boost.Date_Time to measure the clock resolution on a representative machine for each platform.

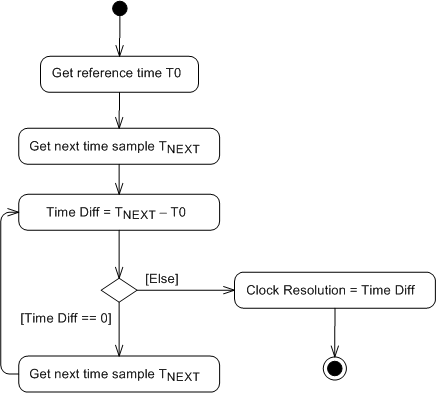

The UML activity diagram below shows the simple algorithm that I used to write a small program that estimates the clock resolution of any compiler-CPU-OS platform combo for which Boost.Date_Time is available. The assumption underlying the design is that the instructions inside the loop execute at least an order of magnitude faster than the clock ticks. With CPU speeds on the order of GHz (nanosecond cycle times) and clock periods of microseconds, this is a pretty decent assumption, no? The algorithm simply spins in a tight, high-speed loop, waiting for the clock value to change relative to an initial reference sample. Note that measuring hardware clock accuracy is another story (does anyone know if clock hardware accuracy can even be estimated in software?).

The function below shows the super secret, proprietary source code that uses the Boost.Date_Time facilities to implement the clock resolution estimation algorithm. Note that the boost microseconds clock, as opposed to the nanoseconds or seconds clock, is used to grab time samples. The seconds clock is too coarse-grained for our needs, and typical off-the-shelf servers do not provide hardware clocks with nanosecond resolution without add-on circuitry. The box below the code shows the results that I obtained for the three platforms on which I ran the program. Of course, the results aren’t perfect (are any results ever perfect?), but since the Solaris and Linux results show sub-millisecond resolution and we expect end-to-end system latencies on the order of hundreds of milliseconds, those clocks will satisfy our latency measurement needs. The Win32 result, however, is crappy. Got any thoughts?

An Epic Heavyweight Battle

In this corner, we have Bjarne “No Yarn” Stroustrup, the father of C++. In the other corner, we have James “The Goose” Gosling, the father of Java. Ding, ding… let’s get ready to ruuuumble!

“No Yarn” comes out swinging and throws the first haymaker:

Well, the Java designers—and probably the Java marketers even more so—emphasized OO to the point where it became absurd. When Java first appeared, claiming purity and simplicity, I predicted that if it succeeded Java would grow significantly in size and complexity. It did. For example, using casts to convert from Object when getting a value out of a container (e.g., (Apple)c.get(i)) is an absurd consequence of not being able to state what type the objects in the container is supposed have. It’s verbose and inefficient. Now Java has generics, so it’s just a bit slow. Other examples of increased language complexity (helping the programmer) are enumerations, reflection, and inner classes. The simple fact is that complexity will emerge somewhere, if not in the language definition, then in thousands of applications and libraries. Similarly, Java’s obsession with putting every algorithm (operation) into a class leads to absurdities like classes with no data consisting exclusively of static functions. There are reasons why math uses f(x) and f(x,y) rather than x.f(), x.f(y), and (x,y).f()—the latter is an attempt to express the idea of a “truly object-oriented method” of two arguments and to avoid the inherent asymmetry of x.f(y).

In an agile counter move, “The Goose” launches a monstrous left hook:

These days we’re beating the really good C and C++ compilers pretty much always. When you go to the dynamic compiler, you get two advantages when the compiler’s running right at the last moment. One is you know exactly what chipset you’re running on. So many times when people are compiling a piece of C code, they have to compile it to run on kind of the generic x86 architecture. Almost none of the binaries you get are particularly well tuned for any of them. When HotSpot runs, it knows exactly what chipset you’re running on. It knows exactly how the cache works. It knows exactly how the memory hierarchy works. It knows exactly how all the pipeline interlocks work in the CPU. It knows what instruction set extensions this chip has got. It optimizes for precisely what machine you’re on. Then the other half of it is that it actually sees the application as it’s running. It’s able to have statistics that know which things are important. It’s able to inline things that a C compiler could never do. The kind of stuff that gets inlined in the Java world is pretty amazing. Then you tack onto that the way the storage management works with the modern garbage collectors. With a modern garbage collector, storage allocation is extremely fast.

On the age-old debate about naked pointers, “The Goose” unleashes a crippling blow to the left kidney:

Pointers in C++ are a disaster. They are just an invitation to errors. It’s not so much the implementation of pointers directly, but it’s the fact that you have to manually take care of garbage, and most importantly that you can cast between pointers and integers—and the way many APIs are set up, you have to!

Enraged, “No Yarn” returns the favor with a rapid jab, jab, jab, uppercut combo:

Well, of course Java has pointers. In fact, just about everything in Java is implicitly a pointer. They just call them references. There are advantages to having pointers implicit as well as disadvantages. Separately, there are advantages to having true local objects (as in C++) as well as disadvantages. C++’s choice to support stack-allocated local variables and true member variables of every type gives nice uniform semantics, supports the notion of value semantics well, gives compact layout and minimal access costs, and is the basis for C++’s support for general resource management. That’s major, and Java’s pervasive and implicit use of pointers (aka references) closes the door to all that.

The “dark side” of having pointers (and C-style arrays) is of course the potential for misuse: buffer overruns, pointers into deleted memory, uninitialized pointers, etc. However, in well-written C++ that is not a major problem. You simply don’t get those problems with pointers and arrays used within abstractions (such as vector, string, map, etc.). Scoped resource management takes care of most needs; smart pointers and specialized handles can be used to deal with most of the rest. People whose experience is primarily C or old-style C++ find this hard to believe, but scope-based resource management is an immensely powerful tool and user-defined types with suitable operations can address classical problems with less code than the old insecure hacks.

Stunned silly, “The Goose” steps back, regroups, and charges back into the fray:

One of the most problematic (situations) over the years in C++ has been multithreading. Multithreading is very tightly designed into the code of Java and the consequence is that Java can deal with multicore machines very, very well.

“No Yarn” stands his ground and attempts to weather the ferocious onslaught:

The very first C++ library (really the very first C with classes) library, provided a lightweight form of concurrency and over the years, hundreds of libraries and frameworks for concurrent, parallel, and distributed computing have been built in C++. C++0x will provide a set of facilities and guarantees that saves programmers from the lowest-level details by providing a “contract” between machine architects and compiler writers—a “machine model.” It will also provide a threads library providing a basic mapping of code to processors. On this basis, other models can be provided by libraries. I would have liked to see some simpler-to-use, higher-level concurrency models supported in the C++0x standard library, but that now appears unlikely. Later—hopefully, soon after C++0x—we will get more libraries specified in a technical report: thread pools and futures, and a library for I/O streams over wide area networks (e.g., TCP/IP). These libraries exist, but not everyone considers them well enough specified for the standard.

And the winner is…?

So, do you think that I’ve served well as an impartial referee for this epic heavyweight battle? Hell, I hope not. I’m strongly biased toward C++ because it’s taken me years of study and diligent practice to become an intermediate-to-advanced C++ programmer (but still well below the skill level of the masters). I think that most Java programmers who religiously trash C++ do so out of fear of its breadth and depth, its suitability for application at all layers of the stack, and the option to “get dangerously close to the machine”. On the other hand, C++ programmers who trash Java do so out of a sense of elitism and a disdain for object-oriented purity.

Note: The snippets in this blarticle were copied and pasted from the delightful and engrossing “Masterminds Of Programming”. The book’s author, Federico Biancuzzi, not only picked the best possible people to interview but also asked insightful and deeply thought-provoking questions.

Implicit Type Conversion

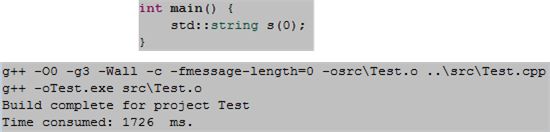

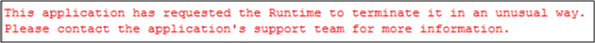

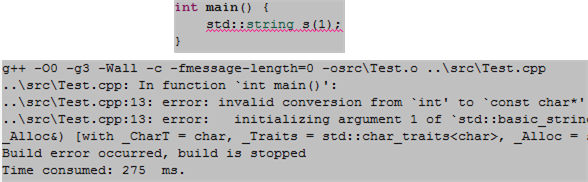

Check out this bit of C++ code and its unexpected, successful compile:

Next, observe the runtime result in the Windows Visual Studio 8.0 IDE:

Finally, check out this bit of C++ code and its expected, unsuccessful compile:

What’s up wit’ dat? I brought this up because we’ve discovered that we have this type of bug in our growing five-figure (line count) code base. However, we haven’t been able to locate and kill it. Yet.

Note: After being enlightened by a kind and sharing member of a linkedIn.com C++ group, I learned the reason for the successful compile. One of the constructors of std::basic_string (of which std::string is a typedef) takes a const char*, and the literal 0 in the s(0) definition is a null pointer constant, so it is implicitly converted from int(0) to a null char pointer. Constructing a std::string from a null pointer is undefined behavior, hence the runtime failure. In the non-zero case, the compiler rejects the attempt because there is no implicit conversion from int(1) to char*. As this example shows, implicit type conversion, a language feature C++ needs in order to remain backward compatible with C, can be a tricky and subtle source of bugs.

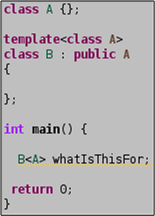

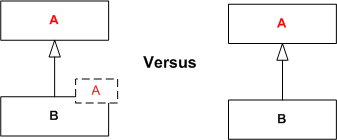

Watch Out For Multipletons

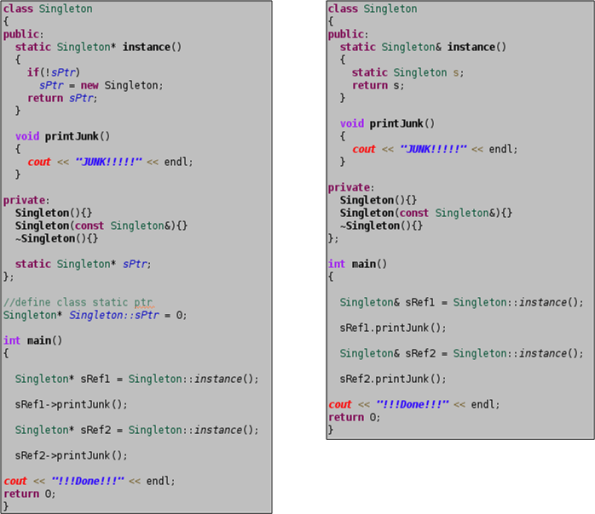

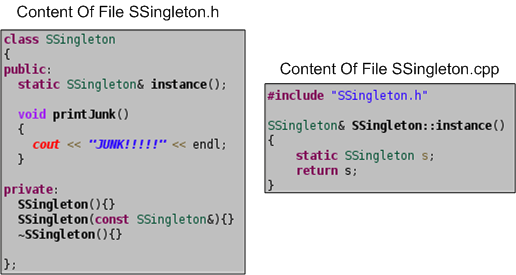

Because C++ has both pointers and references, there are two ways to implement a singleton. The programs below show the difference between the approaches (I know, I know. I could’ve used a smart pointer on the left). Since references can’t be “accidentally” deleted by client code, I prefer the reference method on the right. Plus, there’s no need for an “if” test every time the singleton’s handle is retrieved by client code.

When using a singleton, don’t forget to put the definition of the “instance()” member function (or the pointer definition) into a separate .cpp implementation file rather than inline in the .h file. Otherwise, depending on how the header definition is written and linked (for example, a static pointer defined at namespace scope in the header, or a header compiled into several DLLs), each .cpp file or module that accesses the singleton can get its own personal copy, transforming it into a “multipleton”. You’ll get a program that compiles but has a subtle, hard-to-detect runtime bug that won’t crash the program.

And yes, we found this out the hard way. 🙂

Post-picture insertion notes: 1) I shoulda named the variables in the main() of the pointer version of the singleton sPtr1 and sPtr2. 2) For consistency, I prolly shoulda made the copy assignment operator function private in both implementations. Is there anything else I could do to improve the code?

Accessing Configuration Data

Assume that on initialization, your C++ application reads a bunch of configuration parameters from secondary storage before commencing its runtime mission. Via a trio of simple UML class diagrams, the figure below shows three ways (patterns?) to structure your application to read in and store configuration data for subsequent use by the program’s productive, value-added classes (user1, user2, etc.).

In the first design, a singleton class is used to read and store the runtime configuration in RAM. The singleton can be instantiated either automatically at program load time, in global/namespace memory before main() executes, or the first time it is accessed by one of the program’s user objects.

In the second approach, you employ a “ConfigOwner” class that encapsulates and owns the configuration data reader class (AppConfig). On program startup, the “ConfigOwner” object instantiates the “AppConfig” reader object and then passes a reference/pointer to it to all the user objects (which the “ConfigOwner” object doesn’t own).

In the third strategy, a higher level object (AppEncapsulator) owns all other objects in the application. On startup, this parent class is instantiated first. It fully controls the initialization sequence, creating the “AppConfig” object first and then pushing a reference/pointer to it down to its child User class objects when it subsequently constructs them.

Since the application startup sequence is centrally controlled, the third, parent-child approach is totally thread-safe (as long as the startup sequence completes before any other threads are launched). The singleton approach in which the singleton is instantiated at program load time, before main() starts executing, is also thread-safe. The instantiation-on-first-access singleton approach and the ConfigOwner approach are subtly thread-unsafe without some clever synchronization code added to ensure that the AppConfig object is fully constructed before it is accessed by its users.

Since I’m not fond of using singletons in situations where they’re not absolutely required (and this is arguably one of those cases, no?) and I disdain “clever” coding, I prefer the last, centrally controlled strategy. How about you? Are you clever, or a simpleton like me?

Note: Don’t mind the bracket turd on the right of the diagram. Since I’m a lazy ass and I try not to be a perfectionist when perfection is not needed, I left the pooper in rather than removing, fixing, and reinserting the graphic into the post. Too much work.