Archive

A Software-Defined World?

When all is said and done, more is said then done. – Unknown

Savvy politicians and bureaucrats seem to always say the right thing, but they rarely back up their proclamations with effective action. In “Military’s focus on big systems is now killing us”, DARPA Director Arati Prabhaker states the patently obvious:

The Pentagon must break this monolithic, high-cost, slow-moving, inflexible approach that we have.

Well, duh! I’ve been hearing this rally cry from incoming and outgoing appointees for decades.

Yet another insightful DARPA director states:

The services have largely failed to take advantage of an emerging “software-defined world.” The result has been skyrocketing weapons costs.

Say what? “Sofware-Defined World“? I must have missed the debut of this newly minted jargon. The “Internet Of Things” and its pithy acronym, IoT, must be so yesterday. The “Software-Defined World“, SDW, must be so today. W00t!!

If you read the article carefully, you’ll see that the interviewees have no clue on how to solve the grandiose cost/schedule/quality problems posed by the currently entrenched, docu-centric, waterfall (SRR, PDR, CDR, Fab, Test, Deploy) acquisition process and, especially, the hordes of civil servants whose livelihoods depend on the acquisition system remaining “as is“. But BD00 does know how to solve it: Certified Scrum Master and Certified Product Owner training for all!

A Skeptical “No”

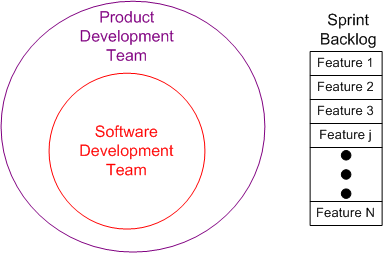

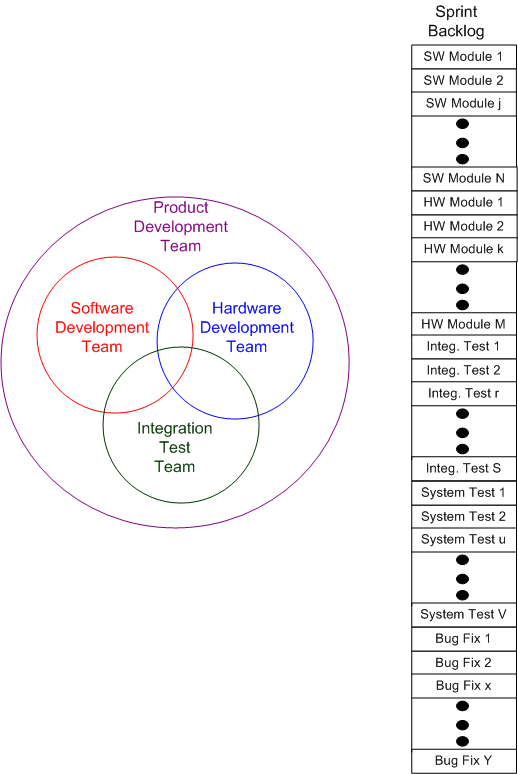

Just about every agile video, book, and article I’ve ever consumed assumes some variant of the underlying team model shown below. The product these types of teams build is comprised of custom software running on off-the-shelf server hardware. Even though it’s not shown, they also assume a client-server structure, request-response protocol, database-centric system.

The team model for the types of systems I work on is given below. They are distributed real-time event systems comprised of embedded, heterogeneous, peer processors glued together via a pub-sub protocol. By necessity, specialized, multi-disciplinary teams are required to develop this category of systems. Also, by necessity, the content of the sprint backlog is more complex and intricately subtler than the typical agile IT product backlog of “features” and “user stories“.

When I watch/read/listen to smug agile process experts and coaches expound the virtues of their favorite methodology using narrow, anecdotal, personal stories from the database-centric IT world, I continuously ask myself “can this apply to the type of products I help build?“. Often, the answer is a skeptical “no“. Not always, but often.

Thanks, But No Thanks

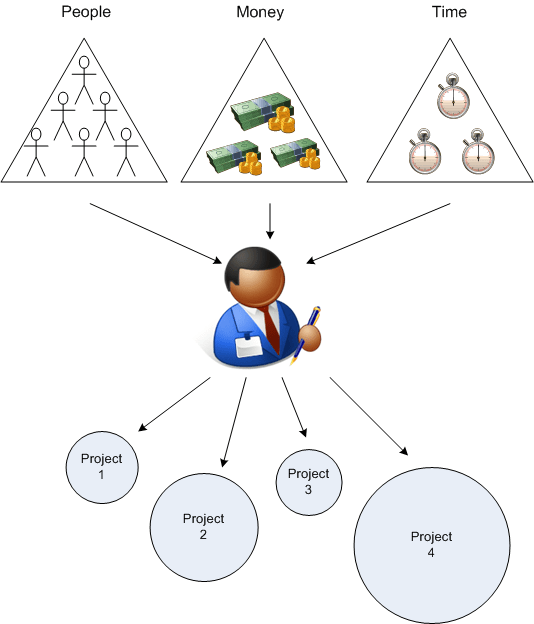

When it comes down to it, the primary function of management is to allocate finite org resources to efforts that will subsequently increase revenues and/or decrease costs. By resources, I mean people and money (for salaries, materials, tools, training, etc) and time.

Since projects vary wildly in complexity/importance/size, required skill-sets, and they (should) have end points, correctly allocating resources to, and amongst, projects is a huge challenge. Both over and under allocation of resources can threaten the financial viability of the org and, thus, everyone within it. Under-allocation can lead to a stagnant product/service portfolio and over-allocation can lead to an expensive product/service portfolio. Note that either under or over allocation can produce individual project failures.

To correctly allocate resources to projects, especially the “human resources“, some things must be semi-known about them. Besides desired outcomes and required execution skills, project parameters like size and/or complexity should be estimated to some level of granularity. That’s why, for the most part, I think the #noestimates and #noprojects people are full of bullocks. Like agile coaches, they mean well and their Utopian ideas sound enticing. Thanks, but no thanks.

Result-Focused, Or State-Focused?

As I continue to explore/evaluate the relevance of the SEMAT Kernel to the future of software engineering, I’m finding that I’m liking it less and less. (For a quick introduction to the SEMAT Kernel, please go read this post, “Revolution, Or Malarkey?“, and then return back here if you’re still interested in what BD00 has to say.)

One of the principal creators of the SEMAT movement, Ivar Jacobson, subjectively asserts that:

“…using the SEMAT kernel to drive team behavior makes the team result focused instead of document driven or activity centric.” – Ivar Jacobson

Using the patented BD00 method of distorted analysis, let’s explore this bold proclamation further.

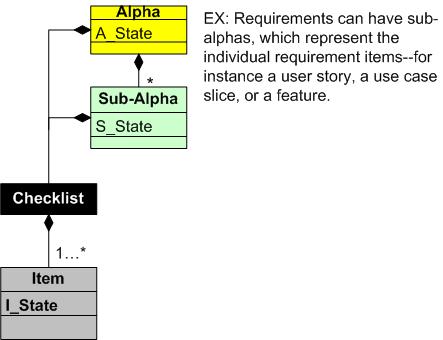

In the current definition of the SEMAT kernel, each of the seven top-level alphas in the SEMAT Kernel has a state whose value at any given moment is determined by the sub-states of a set of criteria items in a checklist. In addition, each sub-alpha itself has a checklist-determined state.

“Each state has a checklist that specifies the criteria needed to reach the state. It is these states that make the kernel actionable and enable it to guide the behavior of software development teams.” – “The Essence Of Software Engineering“

So, let’s look at some numbers for a small, hypothetical, SEMAT-based project. Assume the following definition of our project:

- 7 Alphas

- Each alpha has 3 Sub-Alphas

- Each checklist has 5 Items

With these metrics characterizing our project, we need to continuously track/update:

- 7 alpha states

- 7 * 3 = 21 Sub-alpha states

- 7 * 3 * 5 = 105 checklist item states

Man, that’s a lotto states for our relatively small, 21 sub-alpha project, no? It seems like the SEMAT team could be spending a lot of time in a state of confusion updating the checklist document(s) that dynamically track the state values. Thus,

“…using the SEMAT kernel to drive team behavior makes the team state focused instead of result focused.” – BD00

Unless result == state, Ivar may be mistaken.

The Last Retrospective

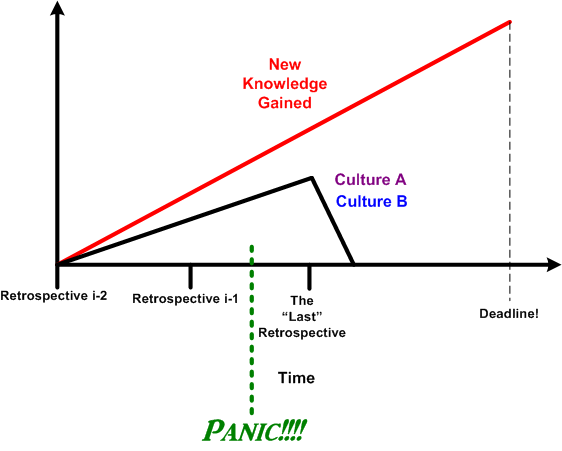

In an ongoing agile endeavor, the practice for eliciting and applying freshly learned knowledge going forward is the periodic “retrospective” (aka periodic post-mortem to the “traditional” old fogeys). Theoretically, retrospectives are temporary way points where individuals: stop, step back, cerebrally inspect what they’ve accomplished and how they’ve accomplished it, share their learning experiences, suggest new process/product improvements, and evaluate previously implemented improvements.

As the figure below depicts, the fraction of newly acquired knowledge applied going forward is a function of group culture. In macho, command and control hierarchies like culture B, the application of lessons learned is suppressed relative to more flexible cultures like A due to the hierarchical importance of opinions.

Regardless of culture type, during schedule-challenged projects with a fixed, do-or-don’t-get-paid deadline (yes, those projects do indeed still exist), this may happen:

When the elites upstairs magically determine that a panic point has appeared (sometimes seemingly out of nowhere): retrospectives get jettisoned, the daily standup morphs into the daily inquisition, corner-cutting “practices” become best practices, and the application of newly acquired knowledge stops cold. Humans being humans, learning still naturally occurs and new knowledge is accrued. However, it is not likely the type of knowledge that will help on future projects.

Don’t Be Fooled

Check out the hypothetical agile burndown and EVM (Earned Value Management) charts below. Like in the “real” world, the example project (or sprint, if you prefer) ended up being underestimated. The shortfall is indicated by the dotted line on the right.

When we literally flip the agile burndown chart in the vertical dimension, we get this:

The moral of the story is: “Don’t be fooled by the agilista herd; an agile burndown chart is nothing but an inverted version of the despised EVM chart.“

Formal Waterfall Events

If ur customer *requires* formal waterfall events like “Sys Reqs Review”, “Prelim Design Review”, “Critical Design Review”, gotta do them.

— Tony DaSilva (@Bulldozer0) March 30, 2014

The customers of all the big government-financed sensor system programs I’ve ever worked on have required the aforementioned, waterfall, dog-and-pony shows as part of their well-entrenched acquisition process. Even prior to commencing a waterfall death march, as part of the pre-win bidding process, customers also (still) require contractors to provide detailed schedule and cost commitments in their proposal submissions – right down to the CSCI level of granularity.

If you think it’s tough to get your internal executive customers to wholeheartedly embrace an “agile adoption” or “no estimates” initiative, try to wrap your mind around the cosmic difficulty of doing the same to a large, fragmented, distributed authority, external acquisition machine whose cogs are fine-tuned to: cover their ass, defend their turf, and doggedly fight to keep the extant process that justifies their worth in place. Good luck with that.

Trivial Trivia

I was going through some old project stuff and stumbled upon the chart below. I developed it back when I was the software lead of a nine person sub-team on an embedded system product development effort:

Putting all those indecipherable acronyms adorning the chart aside, note that the project was performed in 2004 – a mere 3 years after the famous “Agile Manifesto” was hatched. I can’t remember if I knew about (or read) the manifesto at the time, but I do know that Tom Gilb’s “Evo“ and Barry Boehm’s “Spiral“ processes had radically changed my worldview of software development. Specifically, the (now-obvious) concept of incremental development and delivery rang my bell as the best way to mitigate risk on challenging, software-intensive, projects.

As the chart illustrates, the actual hand-off of each of the seven builds (to the system integration test team) was pretty much dead nuts right on target. Despite the fact that the project front end (requirements definition and software design) was managed as a “waterfall” endeavor, the targets were met. Thus, I’m led to believe the following trivial trivia:

Not all agile projects succeed and not all waterfall projects fail.

Dueling Quagmires

To BD00, the agile movement, even though it is a refreshing backlash against the “Process Models And Standards Quagmire” (PMASQ) perpetrated by a well-meaning but clueless mix of government and academic borgs who don’t have to create and build anything, has spawned its own quagmire of “Agile Process Frameworks And Practices Quagmire” (APFAPQ). Like the PMASQ community has ignited a cottage industry of expensive consultants, certifiers, assessors, trainers, and auditors, the APFAPQ movement has jump-started an equivalent community of expensive consultants, coaches, trainers, certifiers.

Government Governance

The figure below highlights one problem with government “governance” of big software systems development. Sure, it’s dated, but it drives home the point that there’s a standards quagmire out there, no?

Imagine that you’re a government contractor and, for every system development project you “win“, you’re required to secure “approval” from a different subset of authorities in a quagmire standards “system” like the one above. Just think of the overhead cost needed to keep abreast of, to figure out which, and to comply with, the applicable standards your product must conform to. Also think of the cost to periodically get your company and/or its products assessed and/or certified. If you ever wondered why the government pays $1000 for a toilet seat, look no further.

I look at this random, fragmented standards diagram as a paranoid, cover-your-ass strategy that government agencies can (and do) whip out when big systems programs go awry: “The reason this program is in trouble is because standards XXX and YYY were not followed“. As if meeting a set of standards guarantees robust, reliable, high-performing systems. What a waste. But hey, it’s other people’s money (yours and mine), so no problemo.