
Measuring Clock Resolution

The Boost.Date_Time C++ library provides an excellent, platform-independent set of interrelated classes for measuring and tracking times and dates during program operation. It is much more capable and, more importantly, more accurate than the standard C++ `<ctime>` library inherited from C. Since we need to benchmark the average and peak latency of our growing distributed real-time system infrastructure running on Linux, Solaris and (maybe) Win32 platforms, I decided to use the Boost.Date_Time functionality to measure the clock resolution on a representative of each platform.

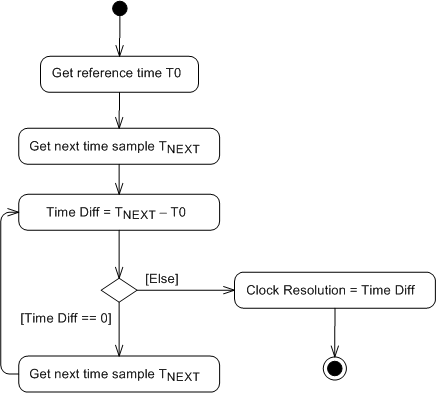

The UML activity diagram below shows the simple algorithm behind a small program I wrote to estimate the clock resolution of any compiler-CPU-OS platform combo that Boost.Date_Time is available for. The assumption underlying the design is that one pass through the loop takes at least an order of magnitude less time than one clock tick. At CPU speeds on the order of GHz (nanosecond cycle times) and clock periods of microseconds, this is a pretty decent assumption, no? The algorithm simply spins around in a tight, high-speed loop waiting for the clock to change value relative to an initial reference sample. The difference between the new value and the reference is the estimated resolution. Note that measuring hardware clock accuracy is another story (does anyone know if clock hardware accuracy can even be estimated in software?).

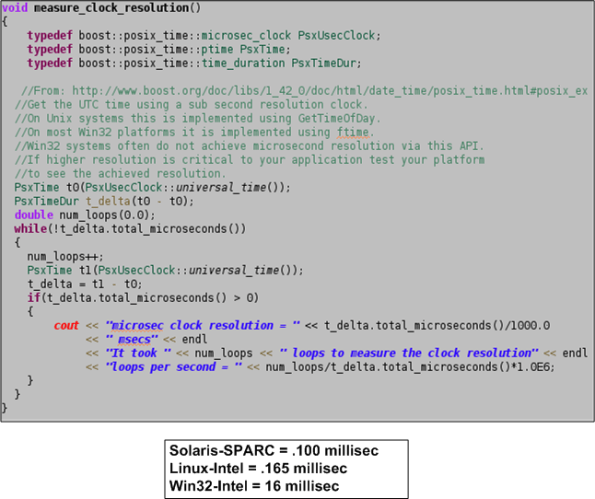
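For readers who cannot see the diagram, here is a minimal sketch of the spin loop it describes. This is not the original function from this post: the names `wait_for_tick`, `estimate_resolution_us` and `system_clock_us` are made up for illustration, and `std::chrono::system_clock` stands in for the Boost microsecond clock so the sketch compiles without Boost. Repeating the measurement and keeping the smallest step is also my own addition, intended to reduce the effect of the process being preempted mid-spin.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Hypothetical helper: take a reference sample, spin until the clock reports
// a different value, and return the size of that step. `now` is any callable
// returning the current time as a count of microseconds.
template <typename NowFn>
std::int64_t wait_for_tick(NowFn now) {
    const std::int64_t reference = now();
    std::int64_t current = now();
    while (current == reference) {  // tight loop: assumed much faster than one tick
        current = now();
    }
    return current - reference;
}

// Hypothetical helper: repeat the measurement and keep the smallest step seen.
template <typename NowFn>
std::int64_t estimate_resolution_us(NowFn now, int trials) {
    assert(trials > 0);
    std::int64_t best = wait_for_tick(now);
    for (int i = 1; i < trials; ++i) {
        const std::int64_t step = wait_for_tick(now);
        if (step < best) {
            best = step;
        }
    }
    return best;
}

// Stand-in time source (an assumption, not the Boost clock used in the post):
// the wall clock truncated to whole microseconds.
std::int64_t system_clock_us() {
    using namespace std::chrono;
    return duration_cast<microseconds>(
               system_clock::now().time_since_epoch()).count();
}
```

Calling `estimate_resolution_us(system_clock_us, 100)` returns the estimate in microseconds. Because the time source is just a callable, a wrapper around the Boost microsecond clock could presumably be dropped in the same way. One caveat: if the wall clock is adjusted backwards during a run, the step can come out negative, so a sturdier version would discard such samples.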

The function below shows the super secret, proprietary source code that uses the Boost.Date_Time facilities to implement the clock resolution estimation algorithm. Note that the Boost microsecond clock, as opposed to the nanosecond or second clock, is used to grab time samples. The second clock is too coarse-grained for our needs, and typical off-the-shelf servers do not provide hardware clocks with nanosecond resolution without add-on circuitry. The box below the code shows the results that I obtained for the three platforms on which I ran the program. The results aren’t perfect (are any results ever perfect?), but since the Solaris and Linux results provide sub-millisecond resolution and we expect end-to-end system latencies on the order of hundreds of milliseconds, those clocks will satisfy our latency measurement needs. Of course, the Win32 result is crappy. Got any thoughts?