
A Stone Cold Money Loser

A widespread and unquestioned assumption deeply entrenched within the software industry is:

For large, long-lived, computationally-dense, high-performance systems, this attitude is a stone cold money loser. When the original cast of players has long departed, and with hundreds of thousands (perhaps millions) of lines of code to scour, how cost-effective and time-effective do you think it is to hurl a bunch of overpaid programmers and domain analysts at a logical or numerical bug nestled deep within your golden goose? What about an infrastructure latency bug? A throughput bug? A fault-intolerance bug? A timing bug?

Everybody knows the answer, but no one, from the penthouse down to the boiler room, wants to do anything about it:

To lessen the pain, note that to be “kind” (shhh! Don’t tell anyone) BD00 used the less offensive “artifacts” word – not the hated “D” word. And no, I don’t mean huge piles of detailed, out-of-synch paper that would torch your state if ignited. And no, I don’t mean sophisticated-looking, semantics-free garbage spit out by domain-agnostic tools “after” the code is written.

Wah, wah, wah:

- “But it’s impossible to keep the artifacts in synch with the code” – No, it’s not.

- “But no one reads artifacts” – Then make the artifacts readable.

- “But no one knows how to write good artifacts” – Then teach them.

- “But no one wants to write artifacts” – So, what’s your point? This is a business, not a vacation resort.

Under Or Over?

In general, humans suck at estimating. More specifically, without historical data, software engineers suck at estimating both the size of a product and the effort to build it – a double whammy. Thus, the bard is wrong. The real thought worth pondering is:

To underestimate or overestimate, that is the question.

In “Software Estimation: Demystifying the Black Art”, Steve McConnell boldly answers the question with this graph:

As you can see, the fiscal penalty for underestimation rockets out of control much more quickly than the penalty for overestimation. In summary, once a project slips into “late” status, the team gets dragged into numerous activities it wouldn’t have needed if it had overestimated the effort: more status meetings with execs, apologies, triaging of requirements, fixing bugs from quick-and-dirty workarounds implemented under schedule duress, postponing demos, pulling out of trade shows, more casual overtime, etc.

So, now that the under/over question has been settled, what question should follow? How about this scintillating selection:

Why do so many orgs shoot themselves in the foot by perpetuating a culture where underestimation is the norm and disappointing schedule/cost performance reigns supreme?

Of course, BD00 has an answer for it (cuz he’s got an answer for everything):

Via the x-ray power of POSIWID, it’s simply what hierarchical command and control social orgs do; and you can’t ask such an org to be what it ain’t.

Courageous Journey

Here’s a dare fer ya:

If your org has a long, illustrious history of product development and you’re just getting started on a new, grand effort that will conquer the world and catapult you and your clan to fame and fortune, ask around for the post-mortem artifacts documenting those past successes.

If by some divine intervention you actually do discover a stash of post-mortems stored on the 360 KB, 5.25-inch floppy disk that comprises your org’s persistent memory, your next death-defying task is to secure access to the booty.

If by a second act of gawd you’re allowed to access the “data”, then pore through the gobbledygook and look for any non-bogus recommendations for future improvement that may be useful to your impending disaster, err, I mean, project. Finally, ask around to discover if any vaunted org processes/procedures/practices were changed as a result of the “lessons learned” from innocently made bad decisions, mistakes, and errors.

But wait, you’re not 100% done! If you do survive the suicide mission with your bowels in place and title intact, you must report your findings back here. To celebrate your courageous journey through Jurassic Park, there may be a free BD00 T-shirt in the offing. Making stuff up is unacceptable – BD00 requires verifiable data and three confirmatory references. Only BD00 is “approved” to concoct crap, both literally and visually, on this dumbass and reputation-busting blawg.

The Scrum Sprint Planning Meeting

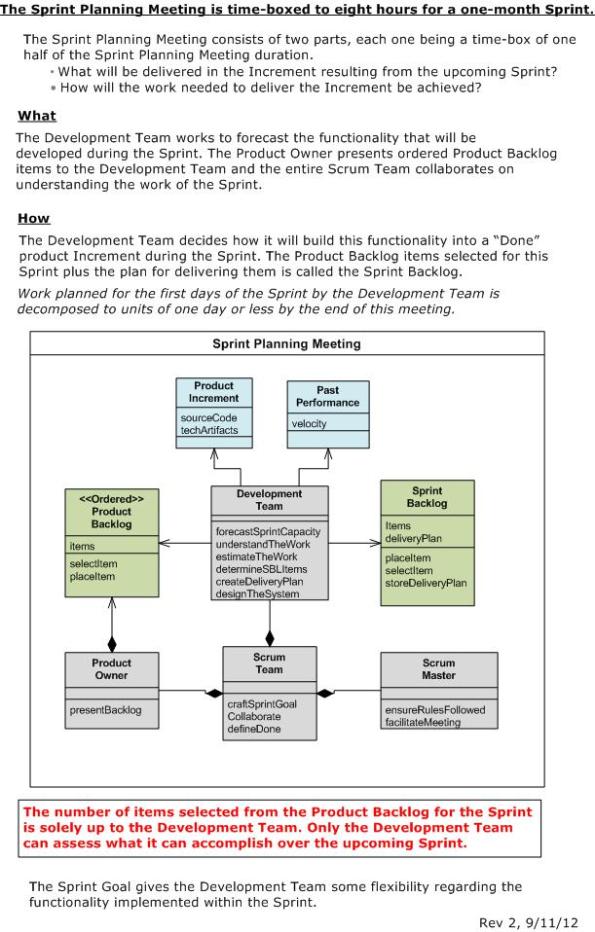

Since the UML Scrum Cheat Sheet post is getting quite a few page views, I decided to conjure up a class diagram that shows the static structure of the Scrum Sprint Planning Meeting (SPM).

The figure below shows some text from the official Scrum Guide. It’s followed by a “bent” UML class diagram that transforms the text into a glorious visual model of the SPM players and their responsibilities.

In unsuccessful Scrum adoptions where a hierarchical command & control mindset is firmly entrenched, I’ll bet that the meeting is a CF (Cluster-f*ck) where nobody fulfills their responsibilities and the alpha dudes dominate the “collaborative” decision making process. Maybe that’s why Ramblin’ Scott Ambler recently tweeted:

Everybody is doing agile these days. Even those that aren’t. – Scott Ambler

D’oh! and WTF! – BD00

Of course, BD00 has no clue what shenanigans take place during unsuccessful agile adoptions. In the interest of keeping those consulting and certification fees rolling in, nobody talks much about those. As Chris Argyris likes to say, they’re “undiscussable”. So, Shhhhhhh!

Bring Back The Old

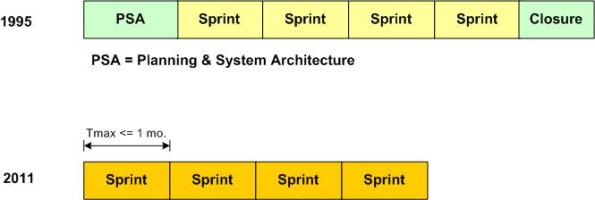

The figure below shows the phases of Scrum as defined in 1995 (“Scrum Development Process”) and 2011 (The 2011 Scrum Guide). By truncating the waterfall-like endpoints, especially the PSA segment, the development framework became less prescriptive with regard to the front and back ends of a new product development effort. Taken literally, there are no front or back ends in Scrum.

The well-known Scrum Sprint-Sprint-Sprint-Sprint… work sequence is terrific for maintenance projects where the software architecture and development/build/test infrastructure are already established and “in place”. However, for brand new developments where costly-to-change upfront architecture and infrastructure decisions must be made before starting to Sprint toward “done” product increments, Scrum no longer provides guidance. Scrum is mum!

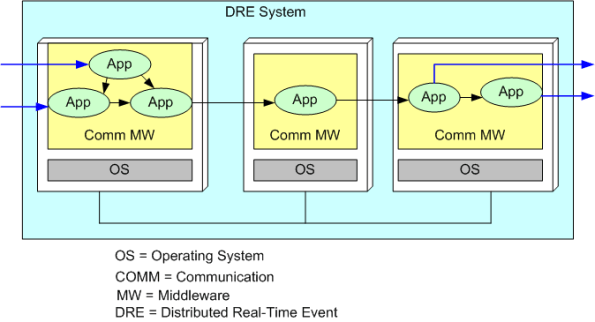

The figure below shows a generic DRE system where raw “samples” continuously stream in from the left and value-added info streams out from the right. In order to minimize the downstream disaster of “we built the system but we discovered that the freakin’ architecture fails the latency and/or thruput tests!”, a bunch of critical “non-functional” decisions must be made and prototyped/tested before developing and integrating App tier components into a potentially releasable product increment.

I think that the PSA segment in the original definition of Scrum may have been intended to mitigate the downstream “we’re vucked” surprise. Maybe it’s just me, but I think it’s too bad that it was jettisoned from the framework.

The time’s gone, the money’s gone, and the damn thing don’t work!

Don’t Do This!

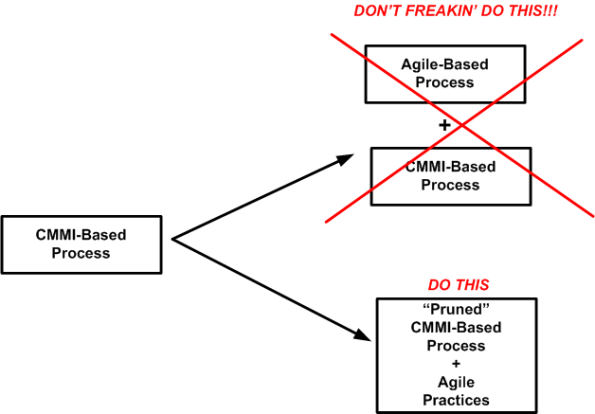

Because of its oxymoronic title, I started reading Paul McMahon’s “Integrating CMMI and Agile Development: Case Studies and Proven Techniques for Faster Performance Improvement”. For CMMI-compliant orgs (Level >= 3) that wish to operate with more agility, Paul warns about the “pile it on” syndrome:

So, you say “No org in their right mind would ever do that”. In response, BD00 sez “Orgs don’t have minds”.

Hurry! Hurry!

Lots of smart and sincere software development folks like Ron Jeffries, Jim Coplien, Scott Ambler, Bob Marshall, Adam Yuret, etc. have recently been lamenting the dumbing-down and commercialization of the “agile” brand. Since I get e-mails like the one below on a regular basis, I can deeply relate to their misery.

Hurry! Hurry! After just 2 days of effort and a measly 1300 beaners of “investment”, you’ll be fully prepared to lead your next software development project into the promised land of “under budget, on schedule, exceeds expectations”.

Whoo Hoo! My new SCRUM Master certificate is here! My new SCRUM Master certificate is here!

Marginalizing The Middle

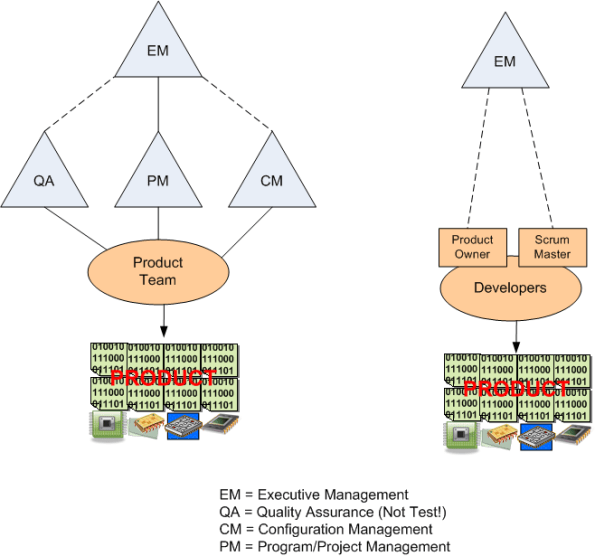

Because they unshackle development teams from heavyweight, risk-averse, plan-drenched, control-obsessed processes promoted by little PWCE Hitlers, and because they increase the degrees of freedom available to development teams, agile methods and mindsets are clearly appealing to the nerds in the trenches. However, in product domains that require the development of safety-critical, real-time systems composed of custom software AND custom hardware components, the risk of agile failure is much greater than in traditional IT system development – from which “agile” was born. Thus, a boatload of questions comes to mind, and my head starts to hurt when I think of the org-wide social issues associated with attempting to apply agile methods in this foreign context:

Will the Quality Assurance and Configuration Management specialty groups, whose whole identity is invested in approving a myriad of documents through complicated submittal protocols and policing compliance with existing heavyweight policies/processes/procedures, become fearful obstructionists because of their reduced importance?

Will penny-watching, untrusting executives who are used to scrutinizing planned-vs-actual schedules and costs in massive Microsoft Project and Excel files via EVM (Earned Value Management) feel a loss of importance and control?

Will rigorously trained, PMI-indoctrinated project managers feel marginalized by new, radically different roles like “Scrum Master”?

Note: I have not read the oxymoronic-titled “Integrating CMMI and Agile Development” book yet. If anyone has, does it address these ever so important, deep seated, social issues? Besides successes, does it present any case studies in failure?

… there is nothing more difficult to carry out, nor more doubtful of success, nor more dangerous to handle, than to initiate a new order of things. For the reformer makes enemies of all those who profit by the old order, and only lukewarm defenders in all those who would profit by the new order… – Niccolo Machiavelli

Citizen CANES

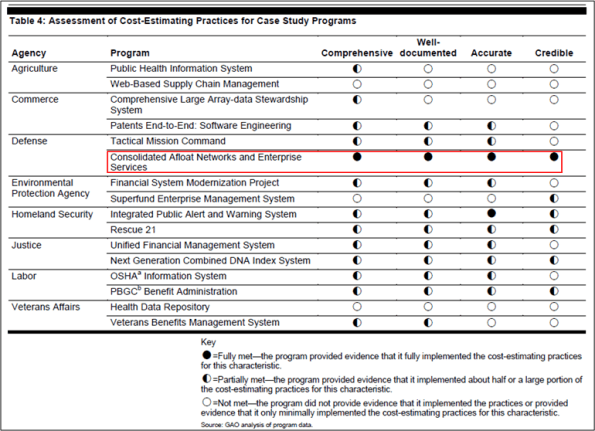

On the same day that the US Government Accountability Office (GAO) released its “Software Development: Effective Practices And Federal Challenges In Applying Agile Methods” report, it also released a report titled “INFORMATION TECHNOLOGY COST ESTIMATION: Agencies Need to Address Significant Weaknesses in Policies and Practices”. In this report, the GAO compared the cost estimation policies and procedures of eight agencies to best practices. It also reviewed the documentation supporting cost estimates for 16 major investments at those eight agencies, representing about $51.5 billion of the planned IT spending for fiscal year 2012. The table below summarizes the GAO findings.

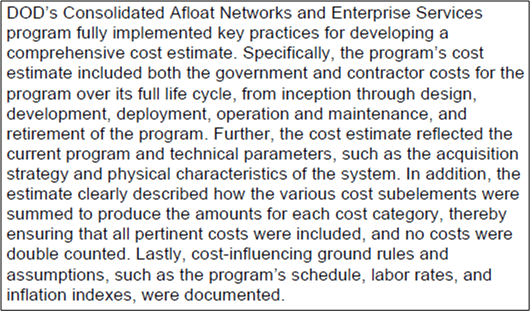

As you can see, only one out of sixteen programs fully met the mighty GAO criteria for “effective” cost estimation: the Navy’s “Consolidated Afloat Networks and Enterprise Services” (CANES) investment. Here’s the GAO’s glowing, bureaucratic-speak assessment of the citizen CANES cost estimation performance as of July 2012:

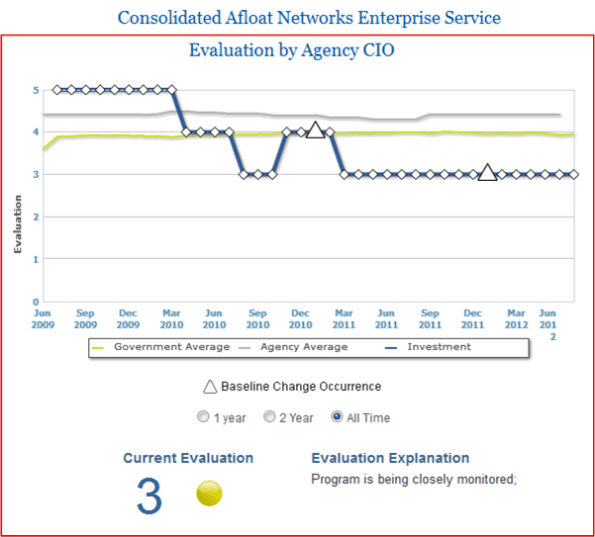

Out of curiosity, I googled the exemplar CANES program. Here’s what I found on the US government’s “IT Dashboard” website:

Note that after debuting with a rating of 5 (Low Risk) in 2009, CANES is currently rated at 3 (medium risk) and is being “closely monitored” by some higher-ups in the infallible chain of command.

Of course, no one, not even that omniscient and omnipresent devil BD00, can tell what will happen to CANES in the future. The point of this post is that spending lots of money and time on meticulous cost estimation to satisfy some authority’s arbitrary and subjective criteria (comprehensive, well-documented, accurate, credible) doesn’t guarantee squat about the future. It does, however, provide a temporary and comfortable illusion of control that “official watchers” crave. We can call it the Linus-blanket effect. Maybe coarser and less comprehensive estimation techniques can work just as well or better?

Comprehensiveness is the enemy of comprehensibility – Martin Fowler

Snapback To “Business As Usual”

Over the years, I’ve read quite a few terrific and insightful reports from the Government Accountability Office (GAO) on the state of several big, software-intensive, government programs. The GAO is the audit, evaluation, and investigative arm of the US Congress. Its mission is to:

help improve the performance and accountability of the federal government for the American people. The GAO examines the use of public funds; evaluates federal programs and policies; and provides analyses, recommendations, and other assistance to help Congress make informed oversight, policy, and funding decisions.

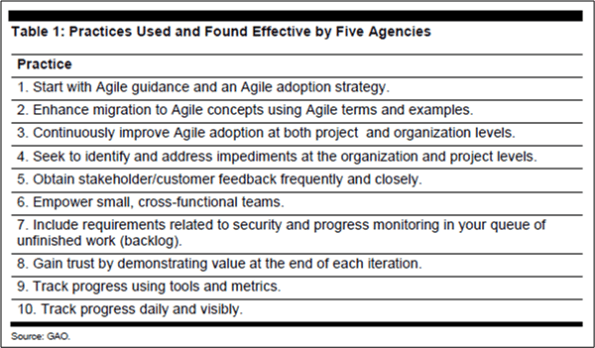

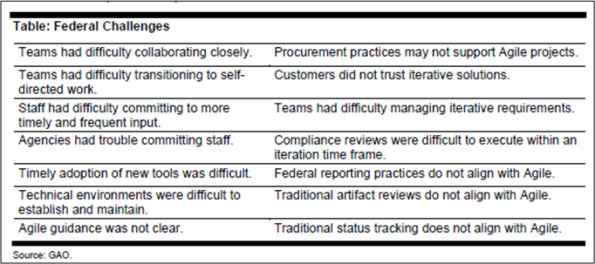

In a newly released report titled “Software Development: Effective Practices And Federal Challenges In Applying Agile Methods”, the GAO communicated the results of a study it performed on the success of using “agile” software methods in five agencies (a.k.a. bureaucracies): the Departments of Commerce, Defense, and Veterans Affairs, the Internal Revenue Service, and the National Aeronautics and Space Administration.

The GAO report deems these 10 best practices as effective for taking an agile approach:

Yawn. Every time I read a high-falutin’ list like this, I’m hauntingly reminded of what Chris Argyris essentially says:

Most advice given by “gurus” today is so abstract as to be un-actionable.

The GAO report also found more than a dozen challenges with the agile approach for federal agencies:

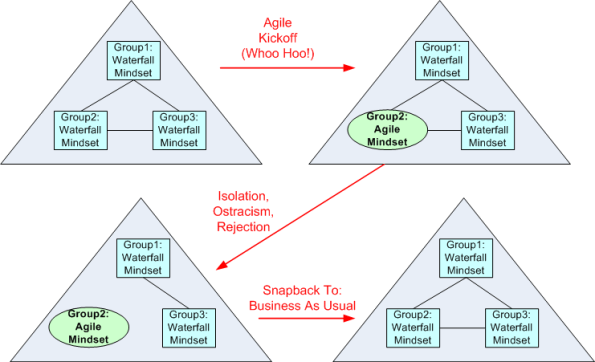

Again, yawn. These are not only federal challenges; they’re HUGE commercial challenges as well. When the whole borg infrastructure, its policies, its protocols, its (planning, execution, reporting) procedures, and most importantly, its sub-group mindsets are steadfastly waterfall-dominated, here’s what usually happens when “agile” is attempted by a courageous borg sub-group:

D’oh! I hate when that happens.