Archive

Frag City

As the accumulation of knowledge in a disciplinary domain advances, the knowledge base naturally starts fragmenting into sub-disciplines, and turf battles break out all over: “my sub-discipline is more reflective of reality than yours, damn it!”

In a bit of irony, the systems thinking discipline, which is all about holism, has followed the well-worn yellow brick road that leads to frag city. For example, compare the disparate structures of two (terrific) books on systems thinking:

Thank Allah there is at least some overlap, but alas, it’s a natural law: with growth comes a commensurate increase in complexity. Welcome to frag city…

The Hidden Preservation State

In the software industry, “maintenance” (like “system”) is an overloaded and overused catchall word. Nevertheless, in “Object-Oriented Analysis and Design with Applications”, Grady Booch valiantly tries to resolve the open-endedness of the term:

It is maintenance when we correct errors; it is evolution when we respond to changing requirements; it is preservation when we continue to use extraordinary means to keep an ancient and decaying piece of software in operation (lol! – BD00) – Grady Booch et al

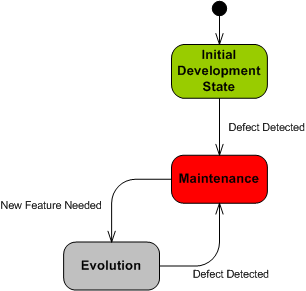

Based on Mr. Booch’s distinctions, the UML state machine diagram below attempts to model the dynamic status of a software-intensive system during its lifetime.

The trouble with a boatload of orgs is that their mental model and operating behavior can be represented as thus:

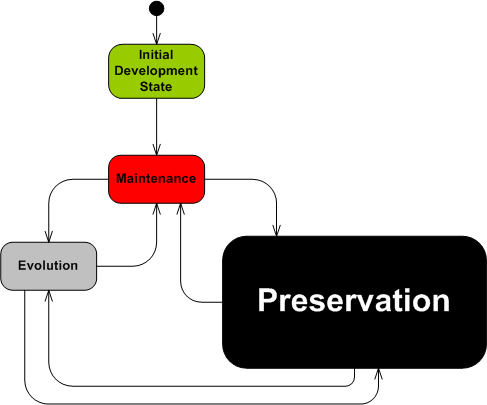

What’s missing from this crippled state machine? Why, it’s the “Preservation” state silly. In reality, it’s lurking ominously behind the scenes as the elephant in the room, but since it’s hidden from view to the unaware org, it only comes to the fore when a crisis occurs and it can’t be denied any longer. D’oh! Call in the firefighters.
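Since the diagrams aren't reproduced in text form, here is one hedged guess at how the two models might look as transition tables. The three state names come from Mr. Booch's distinctions; the event names (`ERROR_FOUND`, `REQUIREMENT_CHANGED`, `DECAY_SET_IN`) and every transition are assumptions made up for illustration, not a transcription of the figures.

```python
from enum import Enum, auto


class State(Enum):
    MAINTENANCE = auto()   # correcting errors
    EVOLUTION = auto()     # responding to changing requirements
    PRESERVATION = auto()  # extraordinary means to keep decaying software alive


class Event(Enum):  # hypothetical event names, not taken from the figures
    ERROR_FOUND = auto()
    REQUIREMENT_CHANGED = auto()
    DECAY_SET_IN = auto()


# Assumed full model: decay drives the system into PRESERVATION, and it stays there.
FULL_MODEL = {
    (State.MAINTENANCE, Event.ERROR_FOUND): State.MAINTENANCE,
    (State.MAINTENANCE, Event.REQUIREMENT_CHANGED): State.EVOLUTION,
    (State.MAINTENANCE, Event.DECAY_SET_IN): State.PRESERVATION,
    (State.EVOLUTION, Event.ERROR_FOUND): State.MAINTENANCE,
    (State.EVOLUTION, Event.REQUIREMENT_CHANGED): State.EVOLUTION,
    (State.EVOLUTION, Event.DECAY_SET_IN): State.PRESERVATION,
    (State.PRESERVATION, Event.ERROR_FOUND): State.PRESERVATION,
    (State.PRESERVATION, Event.REQUIREMENT_CHANGED): State.PRESERVATION,
    (State.PRESERVATION, Event.DECAY_SET_IN): State.PRESERVATION,
}

# The crippled model is the same table with every mention of PRESERVATION removed.
CRIPPLED_MODEL = {
    key: target
    for key, target in FULL_MODEL.items()
    if State.PRESERVATION not in (key[0], target)
}


def step(model, state, event):
    """Return the next state, or None when the model has no answer for the event."""
    return model.get((state, event))
```

Under these assumptions, `step(CRIPPLED_MODEL, State.MAINTENANCE, Event.DECAY_SET_IN)` returns `None`: the org's mental model simply has no transition for decay, which is the "hidden until the crisis" behavior described above.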

Maybe that’s why Mr. “Happy Dance” Booch also sez:

… reality suggests that an inordinate percentage of software development resources are spent on software preservation.

For orgs that operate in accordance with the crippled state machine, “Preservation” is an expensive, time-consuming, resource-sucking sink. I hate when that happens.

Human And Automated Controllers

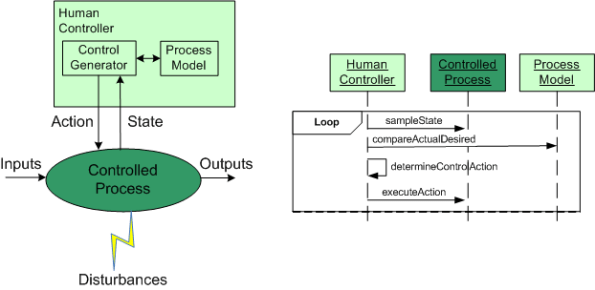

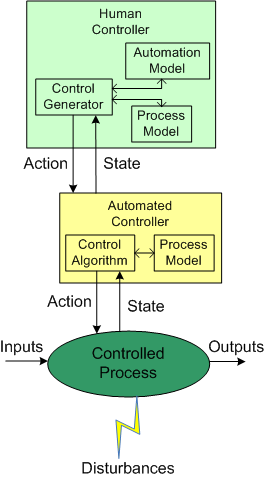

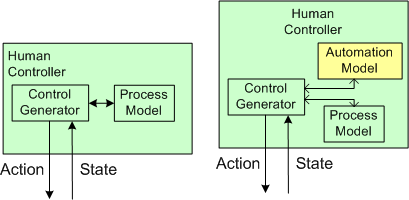

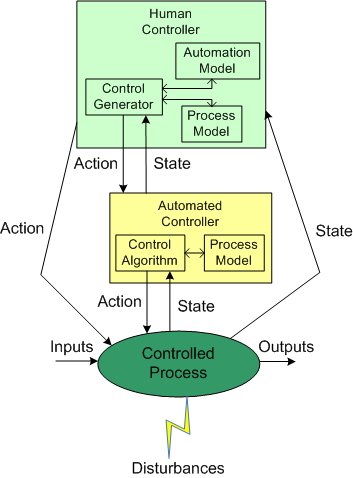

Note: The figures that follow were adapted from Nancy Leveson’s “Engineering A Safer World”.

In the good ole days, before the integration of fast (but dumbass) computers into controlled-process systems, humans had no choice but to exercise direct control over processes that produced some kind of needed/wanted results. During operation, one or more human controllers would keep the “controlled process” on track via the following monitor-decide-execute cycle:

- monitor the values of key state variables (via gauges, meters, speakers, etc.)

- decide what actions, if any, to take to maintain the system in a productive state

- execute those actions (open/close valves, turn cranks, press buttons, flip switches, etc.)
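The three steps above can be sketched as a tiny simulation. Everything concrete here is a made-up assumption for illustration: the `Tank` process, its fill/drain rates, and the 40/60 thresholds are hypothetical and don't come from Leveson's book.

```python
from dataclasses import dataclass


@dataclass
class Tank:
    """Hypothetical controlled process: a tank that drains unless its inflow valve is open."""
    level: float = 50.0
    inflow_open: bool = False

    def tick(self) -> None:
        # Assumed dynamics: +3 per tick with the valve open, -2 with it closed.
        self.level += 3.0 if self.inflow_open else -2.0


def monitor(tank: Tank) -> float:
    """Read the gauge."""
    return tank.level


def decide(level: float, low: float = 40.0, high: float = 60.0):
    """Pick an action, if any, that keeps the level in the productive band."""
    if level < low:
        return "open"
    if level > high:
        return "close"
    return None


def execute(tank: Tank, action) -> None:
    """Turn the valve (or leave it alone when no action was chosen)."""
    if action == "open":
        tank.inflow_open = True
    elif action == "close":
        tank.inflow_open = False


def run(tank: Tank, cycles: int) -> list:
    """Run the monitor-decide-execute cycle and record the level after each tick."""
    history = []
    for _ in range(cycles):
        level = monitor(tank)
        action = decide(level)
        execute(tank, action)
        tank.tick()
        history.append(tank.level)
    return history
```

Calling `run(Tank(), 100)` keeps the level bouncing inside a narrow band around the thresholds. The `decide` function plays the part of the controller's mental model: it only works as long as its picture of the process matches what the process really does.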

As the figure below shows, in order to generate effective control actions, the human controller had to maintain an understanding of the process goals and operation in a mental model stored in his/her head.

With the advent of computers, the complexity of systems that could be, were, and continue to be built has skyrocketed. Because of the rise in the cognitive burden imposed on humans to effectively control these newfangled systems, computers were inserted into the control loop to: (supposedly) reduce cognitive demands on the human controller, increase the speed of taking action, and reduce errors in control judgment.

The figure below shows the insertion of a computer into the control loop. Notice that the human is now one step removed from the value producing process.

Also note that the human overseer must now cognitively maintain two mental models of operation in his/her head: one for the physical process and one for the (supposedly) subservient automated controller:

Assuming that the automated controller unburdens the human controller from many mundane and high speed monitoring/control functions, then the reduction in overall complexity of the human’s mental process model may more than offset the addition of the requirement to maintain and understand the second mental model of how the automated controller works.

Since computers are nothing more than fast idiots with fixed control algorithms designed by fallible human experts (who nonetheless often think they’re infallible in their domain), they can’t issue effective control actions in disturbance situations that were unforeseen during design. Also, due to design flaws in the hardware or software, automated controllers may present an inaccurate picture of the process state, or fail outright while the controlled process keeps merrily chugging along producing results.

To compensate for these potentially dangerous shortfalls, the safest system designs provide backup state monitoring sensors and control actuators that give the human controller the option to override the “fast idiot”. The human controller relies primarily on the interface provided by the computer for monitoring/control, and secondarily on the direct interface couplings to the process.
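One way to picture the override arrangement is the rough sketch below. The function names, the 40/60 thresholds, and the 5-unit disagreement tolerance are all hypothetical; a real design would have its own rules for when the human steps in.

```python
from typing import Optional, Tuple

LOW, HIGH = 40.0, 60.0  # assumed limits of the productive band


def decide(level: float) -> str:
    """Fixed control rule shared by the computer and (in this sketch) the human."""
    if level < LOW:
        return "open"
    if level > HIGH:
        return "close"
    return "hold"


def automated_action(computer_reading: Optional[float]) -> Optional[str]:
    """The 'fast idiot': applies its fixed rule; None models an outright failure."""
    if computer_reading is None:
        return None
    return decide(computer_reading)


def supervised_action(
    computer_reading: Optional[float],
    backup_reading: float,
    tolerance: float = 5.0,
) -> Tuple[str, str]:
    """Return (action, who decided).

    The human normally trusts the computer's picture of the process, but
    overrides it when the computer has failed or when its reading disagrees
    with the direct backup sensor by more than `tolerance`.
    """
    failed = computer_reading is None
    if failed or abs(computer_reading - backup_reading) > tolerance:
        return decide(backup_reading), "human override"
    return automated_action(computer_reading), "automated"
```

For example, `supervised_action(50.0, 30.0)` returns `("open", "human override")`: the computer thinks all is well, the backup gauge says otherwise, and the human acts on the direct coupling to the process.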

Butt The Schedule

Did you ever work on a project where you thought (or knew) the schedule was pulled out of someone’s golden butt? Unlucky you, cuz Bulldozer00 never has.

Maintenance Cycles And Teams

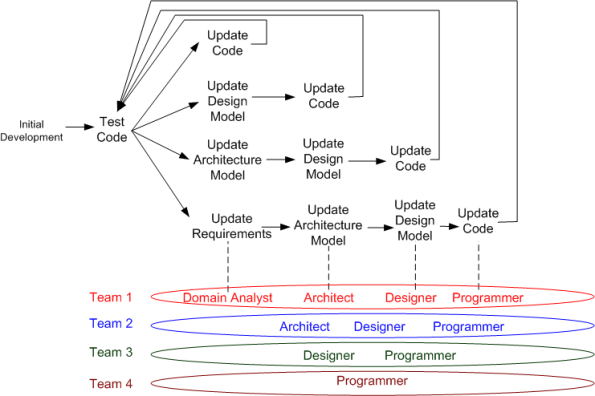

The figure below highlights the unglamorous maintenance cycle of a typical “develop-deliver-maintain” software product development process. Depending on the breadth of impact of a discovered defect or product enhancement, one of 4 feedback loops “should” be traversed.

In the simplest defect/enhancement case, the code is the only product artifact that must be updated and tested. In the most complex case, the requirements, architecture, design, and code artifacts all “should” be updated.
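As a hedged sketch, the four loops can be read as "start at the most upstream artifact the change touches and update everything downstream of it". The text only spells out the simplest and most complex cases, so the two intermediate loops below are an assumption, and `artifacts_to_update` is a hypothetical helper name.

```python
from typing import List

# Artifacts in upstream-to-downstream order, as named in the text.
ARTIFACTS = ["requirements", "architecture", "design", "code"]


def artifacts_to_update(most_upstream_impact: str) -> List[str]:
    """Return every artifact that "should" be updated for a defect or enhancement.

    `most_upstream_impact` is the earliest artifact the change invalidates.
    Assumption: everything downstream of it must be revisited as well.
    """
    start = ARTIFACTS.index(most_upstream_impact)  # raises ValueError for unknown names
    return ARTIFACTS[start:]
```

So `artifacts_to_update("code")` gives `["code"]`, and `artifacts_to_update("requirements")` gives the whole list.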

Of course, if all you have is code, or code plus bloated, superficial, write-once-read-never documents, then the choice is simple – update only the code. In the first case, since you have no docs, you can’t update them. In the second case, since your docs suck, why waste time and money updating them?

After the super-glorious business acquisition phase and during the mini-glorious “initial development” phase, the team is usually (but not always – especially in DYSCOs and CLORGs) staffed with the roles of domain analyst(s), architect(s), designer(s), and programmer(s). Once the product transitions into the yukky maintenance phase, the team may be scaled back and roles reassigned to other projects to cut costs. In the best case, all roles are retained at some level of budgeting – even if the total number of people is decreased. In the worst case, only the programmer(s) are kept on board. In the suicidal case, all roles but the programmer(s) are reassigned, but multiple manager type roles are added. (D’oh!)

Note that there does not have to be a one-to-one correspondence between a role and a person; one person can assume multiple roles. Unfortunately, the staff allocation, employee development, and reward systems in most orgs aren’t “designed” to catalyze and develop the added value of multi-role-capable people. That’s called the “employee-in-a-box” syndrome.

Filtered And Delayed

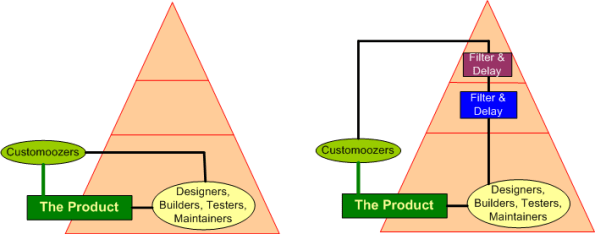

In the consumer products business, the customer and the user are one and the same – a “customoozer”. The figure below shows two system “designs” for a consumer products company. All other things equal, which one has the competitive advantage?

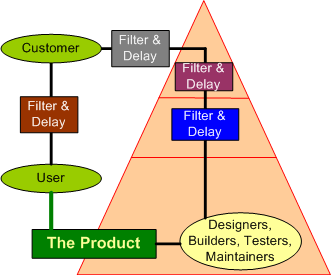

In industries like B2B and government contracting, where the customer and end user are separate entities with differing wants/needs/agendas, the typical institutional design structure is shown below:

It’s no wonder that most innovation occurs in the consumer products industry.

If it could be pulled off, do you think that the subversive system enhancement below could provide a competitive advantage?

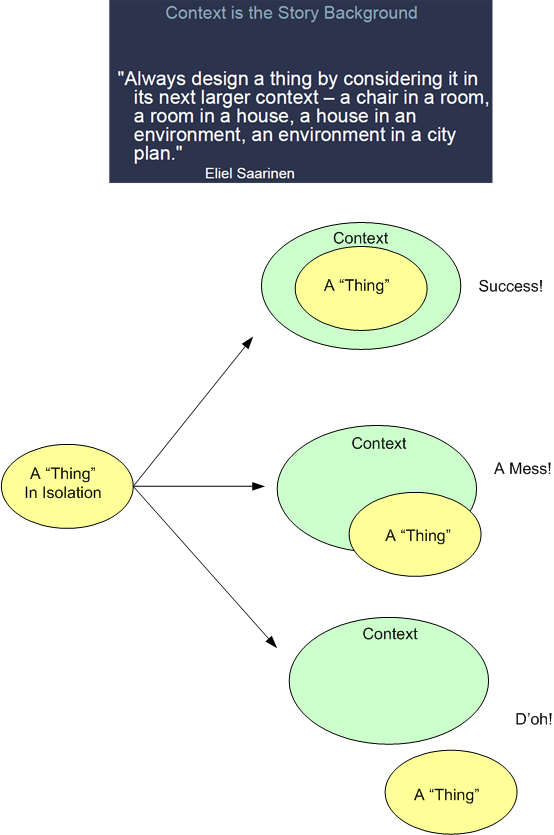

A Thing In Context

Tweet Spree!

While browsing through my online Amazon book notes, I decided to go on a short, hodgepodge tweet spree. What do you think of my spontaneous selections?

Thus, I’m a fickle, stupid, conscious (but paradoxically robotic) being living in a quantized universe who’s neither growing nor developing and can’t maintain a coherent train of thought. D’oh!

Are you a member of this tribe too?

Discrete, Not Continuous?

I’m in the process of reading my seventh layman’s book on quantum physics. It’s written by quantum physicist William Bray and its long-winded title is: “Quantum Physics, Near Death Experiences, Eternal Consciousness, Religion, and the Human Soul”.

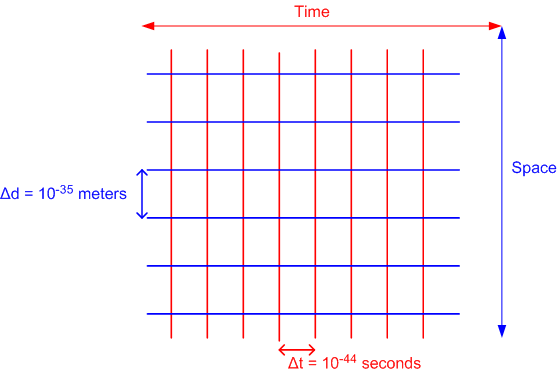

Mr. Bray writes something fascinating about space and time that I don’t recall seeing in any of the other QP books:

On a Planck scale, each moment exists in an isolated region (we call a Planck interval) of space-time existing only in the past or future to another point, and never in the present. No two things anywhere in space-time share a common present, no matter how close they are to one another, all the way down to 10**(-35) meters, or 10**(-44) seconds, apart. – William Bray (p. 31). Kindle Edition

In other words, Mr. Bray asserts that space and time are not continuous dimensions, they’re discrete. The universe is not analog, it’s digital. The movement of energy and matter in time is herky-jerky, jump-to-jump, like a motion picture. However, it “looks” smooth and continuous because of the lack of resolution and sensitivity of our woefully inadequate senses and sense-extending tools. D’oh!

Here’s what Wikipedia says about Planck time:

Theoretically, this is the smallest time measurement that will ever be possible. As of May 2010, the smallest time interval that was directly measured was on the order of 12 attoseconds (12 × 10**(−18) seconds)…. All scientific experiments and human experiences happen over billions of billions of billions of Planck times, making any events happening at the Planck scale hard to detect.

My interpretation is that we will never be able to mechanistically detect what happens between two adjacent Planck time points.
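To get a feel for the gap, here is a back-of-the-envelope calculation. It assumes the commonly quoted approximate value of the Planck time, about 5.39 × 10**(−44) seconds, which does not appear in the quotes above; `planck_times_in` is just a hypothetical helper name.

```python
PLANCK_TIME_S = 5.39e-44        # assumed approximate value of the Planck time, in seconds
SHORTEST_MEASURED_S = 12e-18    # the 12 attoseconds from the Wikipedia quote


def planck_times_in(seconds: float) -> float:
    """Return how many Planck times fit into the given interval."""
    return seconds / PLANCK_TIME_S


ratio = planck_times_in(SHORTEST_MEASURED_S)
print(f"Planck times in 12 attoseconds: {ratio:.2e}")
```

Under that assumption, even the shortest interval measured as of the quote spans roughly 2 × 10**26 Planck times.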

So, what do you think exists between two adjacent, discrete, Planck time points? Could it be that bits of infinite consciousness leak into the finite universe?

After doing some superficial research on Mr. Bray, I’ve become quite skeptical:

- There are no recommendations/testimonials from peers on the inside or backside covers of the book.

- Googling on “William Joseph Bray” doesn’t reveal that much.

- He’s got a Facebook page, but I’m not a member so I didn’t get to see it.

- I discovered his web site, and his credentials seem to be too extensive to be believable.

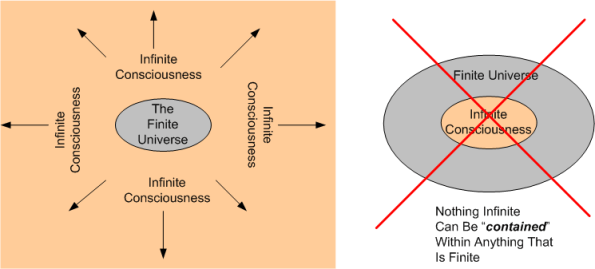

Nevertheless, the book is a fascinatingly refreshing and novel read. In a nutshell, his main theme is that consciousness is not caused by bio-chemical processes in the brain, it’s quite the opposite. Infinite consciousness lies outside of the finite physical universe and it thus paints the universe into being by “collapsing the wavefunction”. Consciousness is the ultimate observer and it creates you and me and everything else in the universe.

Don’t forget, BD00 is a self-proclaimed L’artiste, so don’t believe a word he sez. What do you think?