
Vectors And Lists

In C++ programming, everybody knows that when an application requires lots of dynamic insertions into (and deletions from) an ordered sequence of elements, a linked list is much faster than a vector. Err, is it?

Behold the following performance graph that Bjarne Stroustrup presented during his keynote speech at “Going Native 2012“:

So, “WTF is up wit dat?”, you ask. Here’s what’s up wit dat:

The CPU load happens to be dominated by the time to traverse to the insertion/deletion point – and KNOT by the time to actually insert/delete the element. So, you still yell “WTF!“.

The answer to the seeming paradox is “compactness plus hardware cache“. If you’re not as stubborn and full of yourself as BD00, this answer “may” squelch the stale, flat-earth mindset that is still crying foul in your brain.

Since modern CPUs with big megabyte caches are faster at moving a contiguous block of memory than traversing a chain of links that reside outside of on-chip cache and in main memory, the results that Bjarne observed during his test should start to make sense, no?

To drive his point home, Mr. Stroustrup provided this vector-list example:

Besides the extra memory overhead that a list carries for its links, the likelihood that all of the list’s memory “pieces” reside in on-chip cache is low compared to the contiguous memory required by the vector. Thus, each link jump is likely to require access to slooow, off-chip, main memory.
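For the curious, here’s a minimal sketch of that kind of experiment. It is not Bjarne’s benchmark; the names `insert_sorted`, `time_sorted_inserts`, and `make_values` are made up for illustration, and the fixed seed and value range are arbitrary assumptions. Each value is inserted at its sorted position, which is found by a linear walk from the front of whichever container you hand it:

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <list>
#include <random>
#include <vector>

// Insert `value` at its sorted position. The position is found by a linear
// walk from the front, so both containers visit the same number of elements.
template <typename Container>
void insert_sorted(Container& c, int value) {
    auto pos = std::find_if(c.begin(), c.end(),
                            [value](int x) { return x >= value; });
    c.insert(pos, value);
}

// Insert every element of `values` into `out` (kept sorted) and return the
// elapsed wall-clock time in milliseconds.
template <typename Container>
double time_sorted_inserts(const std::vector<int>& values, Container& out) {
    auto start = std::chrono::steady_clock::now();
    for (int v : values) insert_sorted(out, v);
    auto stop = std::chrono::steady_clock::now();
    assert(std::is_sorted(out.begin(), out.end()));
    return std::chrono::duration<double, std::milli>(stop - start).count();
}

// Hypothetical helper: n pseudo-random values from a fixed seed, so that
// both containers are fed exactly the same input.
std::vector<int> make_values(std::size_t n) {
    std::mt19937 gen(42);
    std::uniform_int_distribution<int> dist(0, 1000000);
    std::vector<int> values(n);
    for (int& v : values) v = dist(gen);
    return values;
}
```

Calling `time_sorted_inserts` on the same values with a `std::vector<int>` and then a `std::list<int>` gives two timings to compare. The numbers depend on your hardware, compiler flags, and element size, so measure on your own box before believing anybody (BD00 included).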

The funny thing is that recently, and I mean really recently, I had to choose between a list and a vector in order to implement a time-ordered list of up to 5000 objects. Out of curiosity, I wrote a quick and dirty little test program to help me decide which to use, and I got the same result as Bjarne. Even with the result I measured, I still chose the list over the vector!

Of course, because of my entrenched belief that a list is better than a vector for insertion/deletion heavy situations, I rationalized my unassailable choice by assuming that I somehow screwed up the test program. And since I was pressed for time (so, what else is new?), I plowed ahead and coded up the list in my app. D’oh!

Update 4/21/13: Here’s a short video of Bjarne himself waxing eloquent on this unintuitive conclusion: “linked list avoidance“.

Ghastly Style

In Bjarne Stroustrup’s keynote speech at “Going Native 2012”, he presented the standard C library qsort() function signature as an example of ghastly style that he wishes programmers and (especially) educators would move away from:

Bjarne then presented an alternative style, which not only yields cleaner and less error-prone code, but also much faster performance to boot:
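Bjarne’s slides aren’t reproduced here, so what follows is only a rough sketch of the contrast and not his actual code. The function names `compare_doubles`, `sort_c_style`, `sort_cpp_style`, and `check_styles_agree` are invented for illustration; `std::qsort` and `std::sort` are the real standard library calls.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <vector>

// The C standard library signature, for reference:
//   void qsort(void* base, size_t nmemb, size_t size,
//              int (*compar)(const void*, const void*));

// "Void star" style: the comparator receives untyped pointers and has to
// cast them back to the real element type by hand.
int compare_doubles(const void* a, const void* b) {
    double x = *static_cast<const double*>(a);
    double y = *static_cast<const double*>(b);
    return (x > y) - (x < y);
}

// The caller must pass the element count and element size correctly;
// the compiler cannot check either one against the data.
void sort_c_style(std::vector<double>& v) {
    std::qsort(v.data(), v.size(), sizeof(double), compare_doubles);
}

// Typed style: the element type travels with the iterators, so there is
// nothing to cast and no size to get wrong.
void sort_cpp_style(std::vector<double>& v) {
    std::sort(v.begin(), v.end());
}

// Small usage check: both styles should produce the same ordering.
void check_styles_agree() {
    std::vector<double> a = {3.5, -1.0, 2.25, 0.0};
    std::vector<double> b = a;
    sort_c_style(a);
    sort_cpp_style(b);
    assert(a == b);
}
```

The usual explanation for the speed difference is that `std::sort` is a template, so the compiler can inline the comparison, whereas `qsort` calls the comparator through a function pointer for every comparison. How big the gap is depends on your compiler and data.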

Bjarne blames educators, who want to stay rooted in the ancient dogma that “low level coding == optimal efficiency“, for sustaining the unnecessarily complex “void star” mindset that still pervades the C and C++ programming population.

Because they are taught the “void star” way of programming by teaching “experts“, and code of that ilk is ubiquitous throughout academia and the industry, newbie C and C++ programmers who don’t know any better strive to produce code of that “quality“. The innocent thinking behind the motivation is: “that’s the way most people write code, so it must be good and kool“.

I can relate to Mr. Stroustrup’s exasperation because it took perfect-me a long time to overcome the “void star” mental model of the world. It was so entrenched in my brain and oft practiced that I still unconsciously drift back into my old ways from time to time. It’s like being an alcoholic where constant self-vigilance and an empathic sponsor are required to keep the demons at bay. Bummer.

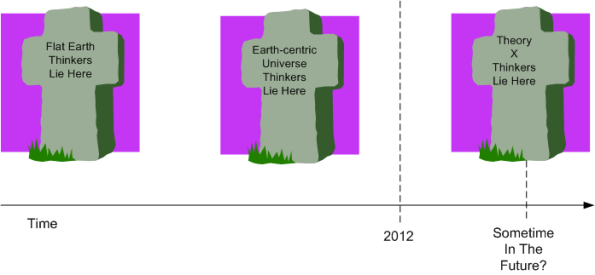

A Succession Of Funerals

Science advances one funeral at a time. – Max (walk the freakin’) Planck

As implied by the quote above, new and more effective ideas/techniques/practices/methods take hold only when the old guard, which fiercely defends the status quo regardless of the consequences, “dies” off and a new generation takes over.

Frederick Winslow Taylor, whom many people credit as the father of “theory X” management science (workers are lazy, greedy, and dumb), died in 1915. Even though that was almost 100 years ago, theory X management mindsets and processes are still deeply entrenched in almost all present-day institutions – with no apparent end in sight.

Oh sure, many so-called enlightened companies sincerely profess to shun theory X and embrace theory Y (workers are self-motivated, responsible, and trustworthy), but when you look carefully under the covers, you’ll find that policies and procedures in big institutions are still rooted in absolute control, mistrust, and paternalism. Because, because, because…, that’s the way it has to be since a corollary to theory X thinking is that chaos and inefficiency would reign otherwise.

Alas, you don’t have to look or smell beneath the covers – and maybe you shouldn’t. You can just (bull)doze(r00) on off in blissful ignorance. If you actually do explore and observe theory X in action under a veneer of theory Y lip service, don’t be so hard on yourself or “them“: 1) there’s nothing you can do about it, 2) they’re sincerely trying their best, and 3) “they know not what they do“.

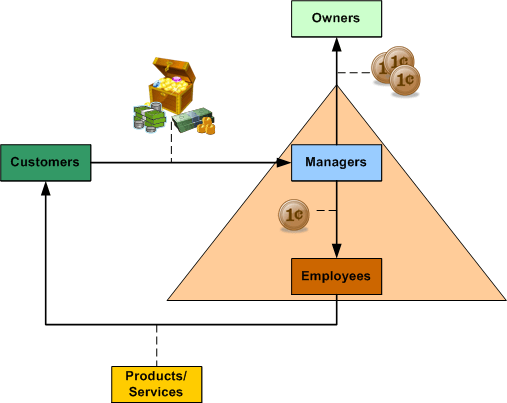

The Principal Objective

The principal objective of a system is what it does, not what its designers, controllers, and/or maintainers say it does. Thus, the principal objective of most corpocratic systems is not to maximize shareholder value, but to maximize the standard of living and quality of work life of those who manage the corpocracy…

The principal objective of corporate executives is to provide themselves with the standard of living and quality of work life to which they aspire. – Addison, Herbert; Ackoff, Russell (2011-11-30). Ackoff’s F/Laws: The Cake (Kindle Locations 1003-1004). Triarchy Press. Kindle Edition.

It seems amazing that the non-executive stakeholders of these institutions don’t point out this discrepancy when the wheels start falling off – or even earlier, when the wheels are still firmly attached. Err, on second thought, it’s not amazing. The 100-year-old “system” demands silence on the matter, no?

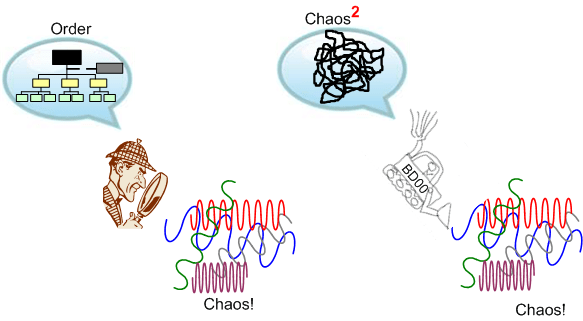

Order Imposition

When functioning correctly, the purpose of “rational thought” is to impose the illusion of order on chaos. As you well know by now, BD00, whose thoughts are dysfunctional, irrationally prefers chaos-squared over order. Actually, he doesn’t prefer it. It’s just the way it is. D’oh!

Fierce Transparency

I’ve been trying to figure out why I admire Zappos.com (I know, I know, they had a nasty security breach recently), Semco, and HCL Technologies so much. Since I have a burning need to understand “why“, I’ve concocted at least one reason: Tony Hsieh, Ricardo Semler, and Vineet Nayar ensure that fierce transparency is practiced within their companies and all their “initiatives” are rooted there.

Working in an environment without transparency is like trying to solve a jigsaw puzzle without knowing what the finished picture is supposed to look like. – Vineet Nayar. Employees First, Customers Second: Turning Conventional Management Upside Down (Kindle Location 547). Kindle Edition.

Of course, I’m making up all this transparency stuff, but hey, it reinforces my weltanschauung (<- I had to look up the spelling a-freakin-gain!). That’s what humans do to give themselves comfort. No?

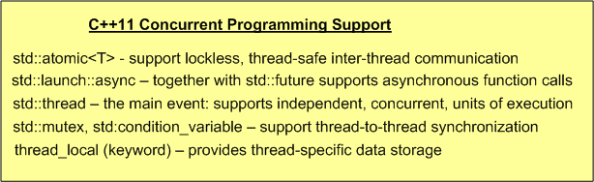

Concurrency Support

Assuming that I remain a lowly, banana-eating programmer and I don’t catch the wanna-be-uh-manager-supervisor-director-executive fever, I’m excited about the new features and library additions provided in the C++11 standard.

Specifically, I’m thrilled by the support for “dangerous” multi-threaded programming that C++11 serves up.

For more info on the what, why, and how of these features and library additions, check out Scott Meyers’ pre-book training package, Anthony Williams’ new book, and Bjarne’s C++11 FAQ page.
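To give a taste of what the standard library now serves up, here’s a minimal sketch using `std::thread`, `std::mutex`, `std::lock_guard`, `std::async`, and `std::future`, all of which arrived with C++11. The function names `count_with_threads` and `sum_async` are invented for illustration, and depending on your toolchain you may need a flag like `-pthread` to build it.

```cpp
#include <cassert>
#include <future>
#include <mutex>
#include <numeric>
#include <thread>
#include <vector>

// Several threads bump one shared counter. The mutex serializes the
// updates, so no increment is lost to a data race.
int count_with_threads(int num_threads, int increments_per_thread) {
    assert(num_threads >= 0 && increments_per_thread >= 0);
    int counter = 0;
    std::mutex m;
    std::vector<std::thread> workers;
    for (int t = 0; t < num_threads; ++t) {
        workers.emplace_back([&counter, &m, increments_per_thread] {
            for (int i = 0; i < increments_per_thread; ++i) {
                std::lock_guard<std::mutex> lock(m);  // unlocks at scope exit
                ++counter;
            }
        });
    }
    for (auto& w : workers) w.join();  // wait for every worker to finish
    return counter;
}

// Sum the front half of `data` in a separate task while the calling thread
// sums the back half; the std::future hands the task's result back.
long long sum_async(const std::vector<int>& data) {
    auto mid = data.begin() + data.size() / 2;
    std::future<long long> front =
        std::async(std::launch::async, [&data, mid] {
            return std::accumulate(data.begin(), mid, 0LL);
        });
    long long back = std::accumulate(mid, data.end(), 0LL);
    return front.get() + back;  // get() blocks until the task is done
}
```

Locking on every single increment is deliberately naive; it keeps the example short, not fast. The “dangerous” part is still on you: the library gives you the tools, not immunity from deadlocks and races.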

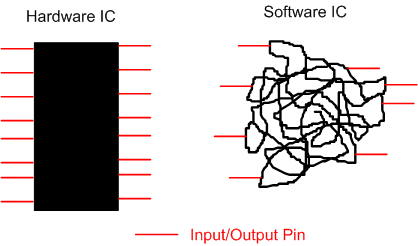

HW And SW ICs

Because software-intensive system development labor costs are so high, the holy grail of cost reduction is “reusability” at all levels of the stack: reusable requirements, reusable architectures, reusable designs, and reusable source code. If you can compose a system from a set of pre-built components instead of writing them from scratch every time, then you’ll own a huge competitive advantage over your rivals who keep reinventing the wheel.

One of the beefs I’ve heard over the years is “why can’t you software weenies be like the hardware guys?” They mastered “reusability” via integrated circuits (ICs) a looong time ago.

One difference between a hardware IC and a software IC is that the number of input/output (IO) pins on a physical chip is FIXED. Because of the malleability and ephemeral nature of software, the number of IO “pins” is in constant flux – usually increasing over time as the software is created.

Even when a software IC is considered “finished and ready for reuse” (LOL), unlike a hardware IC, the documentation on how to use the dang thing is almost always lacking, and users have to pore through 1000s of lines of code to figure out if and how they can integrate the contraption into their own quagmire.

Alas, the software guys can master “reusability” like the hardware guys, but the discipline and time required to do so just isn’t there. With the increasing size of software systems being built today, the likelihood of software guys and their managers overcoming the discipline-time double whammy can be summarized as “not very“.

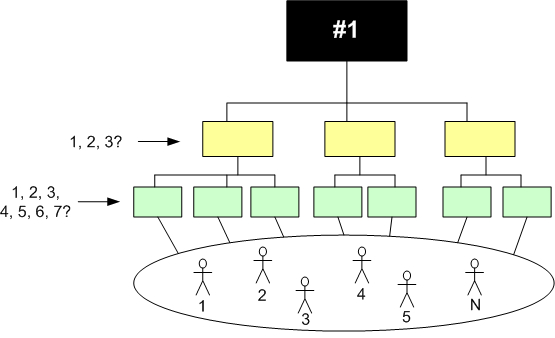

Reeking Of Rank

In the 20th century (remember what it was like way back when?), “Neutron” Jack Welch unabashedly, successfully, and transparently used a ranking system to catapult GE to the top of the financial world by excommunicating the bottom 10% on a yearly(?) schedule.

When leadership teams make a corpo-wide policy change, they do so in a sincere attempt to improve some performance metric in the org without inflicting too much collateral damage. For example, take the above policy of “ranking” employees. Orgs that rank their employees may “assert” that rankings will increase engagement and morale, and let people “know where they stand” in relation to their peers.

That’s all fine and dandy as long as the ranking system applies equally to each and every level in the org – especially if it’s asserted to be a guaranteed slam dunk for increasing employee engagement. Hell, if it’s a no-brainer, then why exclude the supervisor, manager, director, and C-level layers? After all, they’re “employees” too, no?

I wonder if #1 Jack Welch ranked his direct reports and gracefully escorted his bottom 10% out the door every year?

Process Delays And Variety Suppression

Even though it’s unrealistically ideal and unworkable, I give you this zero-overhead value and wealth creation system as a point of reference:

For speculative comparison to the idealized design, I give you this system “enhancement“:

For the ultimate delay-inducing, variety-suppressing, and assimilating borg, I give you this “optimal” design:

Over time, as an org unconsciously but almost assuredly morphs into a borg, the existing delay-inducing “value-added overhead processes” grow bigger, and more of them are inserted into the pipeline for sincere but misguided performance-increasing reasons. To add insult to injury, more and thicker variety-suppression control channels are imposed on the pipeline from above. If this rings a bell with you, then it’s pure coincidence, because like all other delusionary BD00 posts, it’s totally made up.