Archive

Structure, Work, Entropy

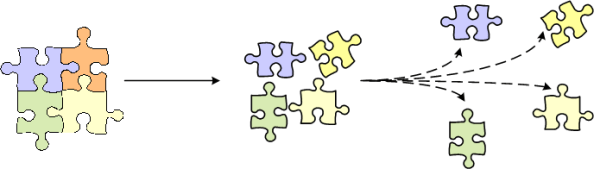

Entropy can be interpreted as a measure of chaos, or disorder. The second law of thermodynamics asserts that entropy increases with the passage of time. Tick, tock, tick, tock. The universe is steadily and surely on the move toward randomness.

As the universe unfolds in a continuous and creative dance, it temporarily suspends its own law of increasing entropy. It spontaneously forms new structures while others are simultaneously disintegrating.

As human beings, we are of the universe, and thus we also possess the awesome power to create. It takes structure plus work to create and, maybe more importantly, sustain something of value. The best we can do is temporarily arrest the growth in entropy by applying structure and performing the work required to keep the structures that we create intact. Eventually, the inexorable rise in entropy wins and our creations disintegrate. It is what it is.

Mista Level

“Design is an intimate act of communication between the designer and the designed” – W. L. Livingston

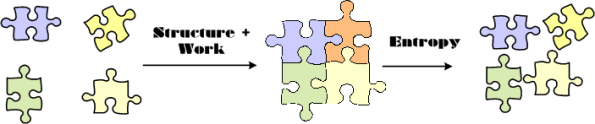

I’m currently in the process of developing an algorithm that is required to accumulate and correlate a set of incoming, fragmented messages in real-time for the purpose of producing an integrated and unified output message for downstream users.

The figure below shows a context diagram centered around the algorithm under development. The input is an unending, 24×7, high speed, fragmented stream of messages that can exhibit a fair amount of variety in behavior, including lost and/or corrupted and/or misordered fragments. In addition, fragmented message streams from multiple “sources” can be interlaced with each other in a non-deterministic manner. The algorithm needs to: separate the input streams by source, maintain/update an internal real-time database that tracks all sources, and periodically transmit source-specific output reports when certain validation conditions are satisfied.

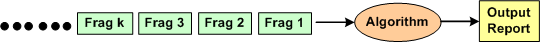
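To make the three responsibilities above a bit more concrete, here is a minimal Python sketch of the accumulate-and-correlate idea. It is not the actual algorithm: the `Fragment` fields (`source_id`, `seq`, `total`, `checksum_ok`) and the `Correlator` class are hypothetical names invented for illustration, and "all fragments present" stands in for whatever the real validation conditions are.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    # All fields are illustrative assumptions, not the real message format.
    source_id: str            # which source emitted the fragment
    seq: int                  # position of this fragment within its message
    total: int                # number of fragments in the complete message
    payload: bytes
    checksum_ok: bool = True  # result of some upstream integrity check


class Correlator:
    """Separates fragments by source and emits a report once a message is whole."""

    def __init__(self):
        # Internal "database": source_id -> {seq: payload}
        self._db = defaultdict(dict)

    def accept(self, frag):
        """Store one fragment; return (source_id, message) when a report is ready."""
        if not frag.checksum_ok or not (0 <= frag.seq < frag.total):
            return None  # drop corrupted or nonsensical fragments
        parts = self._db[frag.source_id]
        parts[frag.seq] = frag.payload  # keyed by seq, so arrival order is irrelevant
        # Stand-in validation condition: every fragment of the message has arrived.
        if all(i in parts for i in range(frag.total)):
            message = b"".join(parts[i] for i in range(frag.total))
            del self._db[frag.source_id]
            return frag.source_id, message
        return None


if __name__ == "__main__":
    correlator = Correlator()
    # Two interlaced sources, one misordered message, one corrupted fragment.
    stream = [
        Fragment("A", 1, 2, b"lo"),
        Fragment("B", 0, 1, b"x"),
        Fragment("A", 0, 2, b"hel", checksum_ok=False),
        Fragment("A", 0, 2, b"hel"),
    ]
    for frag in stream:
        report = correlator.accept(frag)
        if report is not None:
            print(report)
```

The sketch deliberately ignores lost fragments: a message with a missing piece simply never completes here, so a real-time version would presumably need some timeout or aging policy for stale entries.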

After studying literally thousands of pages of technical information that describe the problem context that constrains the algorithm, I started sketching out and “playing” with candidate algorithm solutions at an arbitrary and subjective level of abstraction. Call this level of abstraction level 0 (L0). After looping around and around in the L0 thought space, I “subjectively decided” that I needed a second, more detailed and less abstract, level of definition, L1.

After maniacally spinning around within and between the two necessarily entangled hierarchical levels of definition, I arrived at a point of subjectively perceived stability in the design.

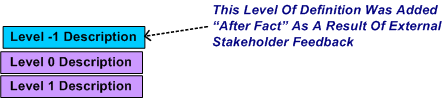

After receiving feedback from a fellow project stakeholder who needed an even more abstract level of description to communicate with other, non-development stakeholders, I decided that I mista level. However, I was able to quickly conjure up an L-1 description from the pre-existing lower level L0 and L1 descriptions.

Could I have started the algorithm development at L-1 and iteratively drilled downward? Could I have started at L1 and iteratively “syntegrated” upward? Would a one-level-only (L-1, L0, or L1) specification be sufficient for all downstream stakeholders to use? The answers to all these questions, and others like them, are highly subjective. I chose the jagged and discontinuous path that I traversed based on real-time situational assessment in the now, not based on some one-size-fits-all, step-by-step, corpo-approved procedure.

Wide But Shallow, Narrow But Deep

I just “finished” (yeah, that’s right –> 100% done (LOL!)) exploring, discovering, defining, and specifying the functional changes required to add a new feature to one of our pre-existing, software-intensive products. I’m currently deep in the trenches exploring and discovering how to specify a new set of changes required to add a second, related feature to the same product. Unlike glamorous “Greenfield” projects where one can start with a blank sheet of paper, I’m constrained and shackled by having to wrestle with a large and poorly documented legacy system. Sound familiar?

The extreme contrast between the demands of the two project types is illuminating. The first one required a “wide but shallow” (WBS) analysis and synthesis effort while the current one requires a “narrow but deep” (NBD) effort. Both types of projects require long periods of sustained immersion in the problem domain, so most (all?) managers won’t understand this post. They’re too busy running around in ADHD mode acting important, goin’ to endless agenda-less meetings, and puttin’ out fires (that they ignited in the first place via their own neglect, ignorance, and lack of listening skills). Gawd, I’m such a self-righteous and bad person obsessed with trashing the guild of management 🙂 .

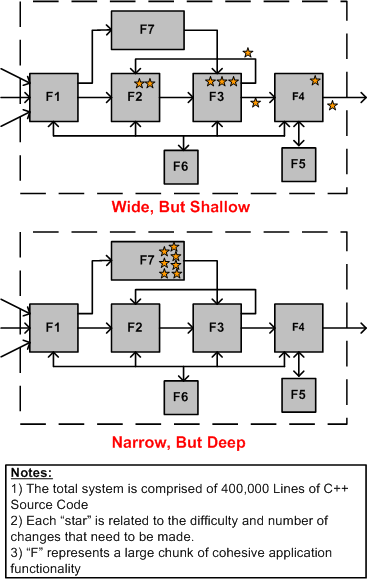

The figure below highlights the difference between WBS and NBD efforts for a “hypothetical” product enhancement project.

In WBS projects, the main challenge is hunting down all the well-hidden spots that need to be changed within the behemoth. Missing any one of these change-spots can (and usually does) eat up lots of time and money down the road when the thing doesn’t work and the product team has to find out why. In NBD projects, the main obstacle to overcome is the acquisition of the specialized application domain knowledge and expertise required to perform localized surgery on the beast. Since the “search” for the change/insertion spots of an NBD effort is bounded and localized, an NBD effort is much lower risk and less frustrating than a WBS effort. This is doubly true for an undocumented system, where studying massive quantities of source code is the only way to discover the change points scattered throughout it. It’s also more difficult to guesstimate “time to completion” for a WBS project than it is for an NBD project. On the other hand, much more learning takes place in a WBS project because of the breadth of exposure to large swaths of the code base.

Assuming that you’re given a choice (I know that this assumption is a sh*tty one), which type of project would you choose to work on for your next assignment: a WBS project or an NBD project? No cheatin’ is allowed by choosing “neither” 😉 .

Functional Allocation I

Some system engineering process descriptions talk about “Functional Allocation” as being one of the activities that is performed during product development. So, what is “Functional Allocation”? Is it the allocation of a set of interrelated functions to a set of “something else”? Is it the allocation of a set of “something else” to a set of function(s)? Is it both? Is it done once, or is it done multiple times, progressing down a ladder of decreasing abstraction until the final allocation iteration is from something abstract to something concrete and physically measurable?

I hate the word “allocation”. I prefer the word “design” because that’s what the activity really is. Given a specific set of items at one level of abstraction, the “allocator” must create a new set of items at the next lower level of abstraction. That seems like design to me. Doesn’t it to you? Depending on the nature and complexity of the product under development, conjuring up the next lower level set of items may be very difficult. The “allocator” has an infinite set of items to consciously choose from and purposefully interconnect. “Allocation” implies a bland, mechanistic, and deterministic procedure of apportioning one set of given items to another, different set of given items. However, in real life only one set of items is “given” and the other set must be concocted out of nowhere.

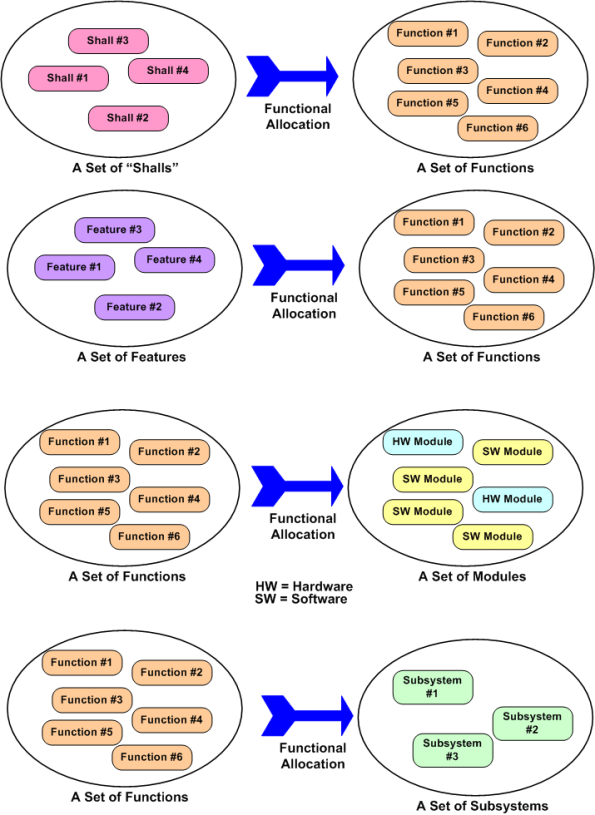

The figure below shows four different types of functional allocations: shalls-to-functions, features-to-functions, functions-to-modules, and functions-to-subsystems. Each allocation example has a set of functions involved. In the first two examples, the set of functions has been allocated “to”, and in the last two examples, the set of functions has been allocated “from”.

So, again I ask, what is functional allocation? And a question for managers who love to remove people from the loop and automate every activity they possibly can to reduce costs: can human beings ever be removed from the process of functional allocation? If you said no, then what can you do to help make the process of allocation more efficient?

Contained And Container

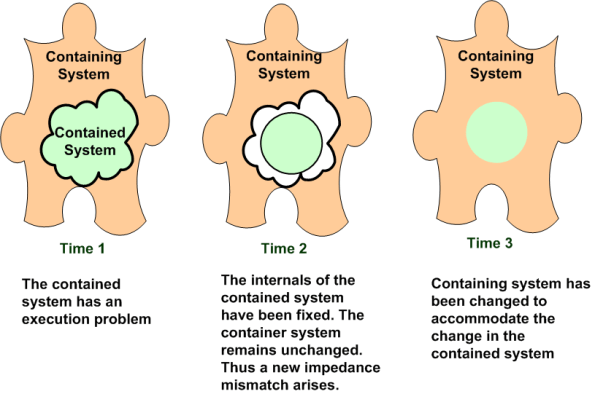

In Russell Ackoff’s excellent book titled Idealized Design, he talks about container and contained systems. He essentially states that optimizing the contained system without changing the container system is a failure in waiting. The figure below depicts what often happens when a change agent succeeds in improving the contained system without consideration of the container system.

At time 1, the change agent realizes that there is an efficiency problem within the contained system. After an epic battle against the forces conspiring to keep the status quo intact, he/she succeeds in smoothing out the operation of the contained system at time 2. However, since the container system was neglected, it still operates according to the old rules and interfaces of time 1. Thus, an impedance mismatch has appeared at the interface between the container and contained systems. This impedance mismatch can cause organizational performance to be worse than it was before the change to the contained system (the cure is worse than the disease)!

In an ideal system change effort, both the container and contained systems are improved. Done correctly, a smooth and high-performing system-of-systems, like the above model at time 3, can be achieved. Compare the smooth, circular, integrated interface at time 3 with the inefficient and cloudy interfaces of the two earlier times.