
Geeks Bearing Formulas

Since there are so few champions who have successfully leveraged simplicity to paradoxically conquer complexity, and he is one of them, legendary investor Warren Buffett is high on my hero and mentor list. Check these jewels out:

All I can say is, beware of geeks … bearing formulas. – Warren Buffett

The business schools reward difficult complex behavior more than simple behavior, but simple behavior is more effective. – Warren Buffett

The Commencement Of Husbandry

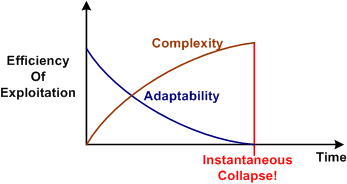

The figure above was copied over from yesterday’s post. Derived from Joseph Tainter’s “The Collapse of Complex Societies”, it simply illustrates that as the complexity of a social organizational structure necessarily grows to support the group’s own growth and survival needs, the adaptability of the structure decreases. The flat and loosely coupled institutional structures originally created by the group’s elites (with the willing consent of the commoners) start rising hierarchically and coalescing into a rigid, gridlocked monolith incapable of change. At some unknown future point, when an unwanted external disturbance exceeds the group’s ability to handle it with its existing complex problem-solving structures and intellectual wizardry, the whole Tower of Babel comes tumbling down, because the monolith is incapable of the alternative: adapting to the disturbance via change. Poof!

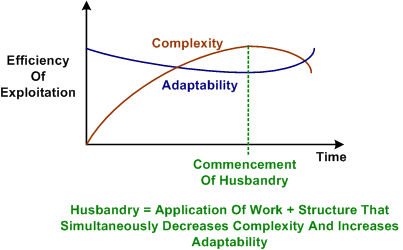

According to Tainter, once the process has started, it is irreversible. But is it? Check out the figure above. In this example, the group leadership not only awakens to the doomsday scenario, but also commences the process of husbandry to reverse the decline by:

- Re-structuring (not just tinkering and rearranging the chairs) for increased adaptability – by simplifying.

- Scouring the system for, and delicately removing, useless appendix-like substructures.

- Discovering the pockets of fat that keep the system immobile and trimming them away.

- Loosening dependencies between substructures and streamlining the interactions between those substructures by jettisoning bogus processes and procedures.

- Installing effective, low lag time, internal feedback loops and external sensors that allow the system to keep moving forward and probing for harmful external disturbances.

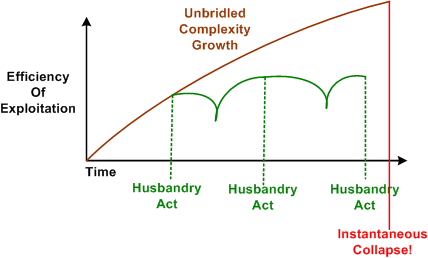

If husbandry is boldly executed right (and that’s a big IF for humongous institutions with a voracious appetite for resources), an effectively self-controlled and adaptable production system will emerge. Over time, and with sustained periodic acts of husbandry to reduce complexity, the system can prosper for the long haul, as shown in the figure above.

Morally Irresponsible Stooges

In the first place, it is clear that the degradation of the position of the scientist as an independent worker and thinker to that of a morally irresponsible stooge in a science factory has proceeded even more rapidly and devastatingly than I had expected. The subordination of those who ought to think to those who have the administrative power is ruinous to the morale of the scientist, and quite to the same extent, the objective scientific output of the nation. – Norbert Wiener.

By stealing Norby’s quote and replacing a few words, we can make up this nasty, vitriolic, equivalent passage (cuz I like to make stuff up):

In the first place, it is clear that the degradation of the position of the product creator/developer as an independent worker and thinker to that of a morally irresponsible stooge in a corpocracy has proceeded even more rapidly and devastatingly than I had expected. The subordination of those who ought to think to those who have the bureaucratic power is ruinous to the morale of the wealth creator, and quite to the same extent, the productive output of the CCF. – Bulldozer00.

These days, exploiters are more valued than explorers and makers. In the good ole days (boo hoo!) and in most present-day startup companies, the exploiters were/are also the explorers and makers, but because of a lack of respect and support for the species, the multi-disciplined systems thinker and doer has gone the way of the dinosaur. It’s only getting worse because, as complexity grows, the need for Renaissance men and women to harness the increase in complexity’s dark twin, entropy, is accelerating.

Not Baffling

“Increasingly, people seem to misinterpret complexity as sophistication, which is baffling—the incomprehensible should cause suspicion rather than admiration. Possibly this trend results from a mistaken belief that using a somewhat mysterious device confers an aura of power on the user.” – Niklaus Wirth

Niklaus, it’s not baffling. People do it because, in a society that adores academic intelligence over all else, they don’t want to look and feel stupid in front of others. By acting as though they admire an incomprehensible monstrosity that they don’t understand, the people around them (especially the creators of the untenable complexity) will think they are smart and sophisticated too.

Regardless of whether the “misinterpretation” happens consciously or unconsciously, it’s ego driven. I know this because I’ve done it many times... both consciously and unconsciously.

“The intuitive mind is a sacred gift and the rational mind is a faithful servant. We have created a society that honors the servant and has forgotten the gift.” – Albert Einstein

Powerful Tools

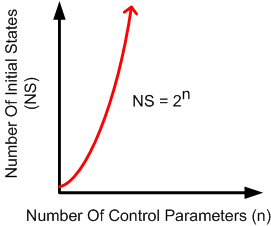

Cybernetician W. Ross Ashby’s law of requisite variety states that “only variety can effectively control variety”. Another way of stating the law is that in order to control an innately complex problem with N degrees of freedom, a matched solution with at least N degrees of freedom is required. However, since solutions to hairy socio-technical problems introduce their own new problems into the environment, over-designing a problem controller with too many extra degrees of freedom may be worse than under-designing the controller.

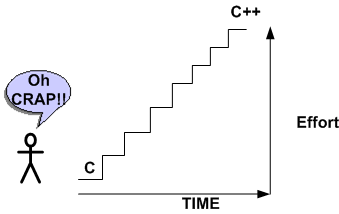

By analogy with Ashby’s law, it takes powerful tools to solve powerful problems. Using a hammer where only a sledgehammer will get the job done wastes effort and leaves the problem unsolved. However, learning how to wield powerful new tools takes quite a bit of time and effort for non-genius people like me, and most people aren’t willing to invest prolonged time and effort to learn new things. Compared with adolescents, adults have an especially hard time learning powerful new tools, because becoming competent requires sustained immersion and repetitive practice. That’s why adults typically don’t stick with learning a new language or learning how to play an instrument.

In my case, it took quite a bit of time and effort before I successfully jumped the hurdle between the C and C++ programming languages. Ditto for the transition from ad-hoc modeling to UML modeling. These additions to my toolbox have allowed me to tackle larger and more challenging software problems. How about you? Have you increased your ability to solve increasingly complex problems by learning how to wield commensurately powerful (and necessarily complex) tools and techniques? Are you still pointing a squirt gun in situations that cry out for a magnum?

Obfuscators And Complexifiers

One of my pet peeves, since I’m pretty unsuccessful at handling it, is having to deal with obfuscators and complexifiers (OAC). People who chronically exhibit these behaviors serve as formidable obstacles to progress by preventing the right info from getting to the right people at the right time. “They” do so either because they’re innocently ignorant or because they’re purposefully trying to camouflage their lack of understanding of the topic under discussion for fear of “looking bad”. I have compassion for the former, but great disdain for the latter – blech!

The conundrum is that, in CCH corpocracies, there’s an unwritten law that says DICs aren’t “allowed” to publicly expose purposeful OACs if the perpetrators are above a certain untouchable rank in the infallible corpo command chain. In extremely dysfunctional mediocracies, no one is allowed to call any purposeful OAC out onto the mat, regardless of where the dolt is located in the tribal caste system. In either case, retribution for the blasphemous transgression is always swift, effective, and everlasting. Bummer.

CCP

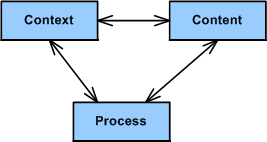

Relax, right-wing meanies, it’s not CCCP. It’s CCP, and it stands for Context, Content, and Process. Context is a clear, but not necessarily immutable, definition of what’s in and what’s out of the problem space. Content is the intentionally designed static structure and dynamic behavior of the socio-technical solution(s) to be applied in an attempt to solve the problem. Process is the set of development activities, tasks, and toolboxes that will be used to pre-test (simulate or emulate), construct, integrate, post-test, and carefully introduce the solution into the problem space. Like the other well-known trio, schedule-cost-quality, the three CCP elements are intimately coupled and inseparable. Myopically focusing on optimizing one element while refusing to pay homage to the others degrades the performance of the whole.

I first discovered the holy trinity of CCP many years ago by probing, sensing, and interpreting the systems work of John Warfield via my friend, William Livingston. I’ve been applying the CCP strategy for years to technical problems that I’ve been tasked to solve.

You can start using the CCP problem solving process by diving into any of the three pillars of guidance. It’s not a neat, sequential, step-by-step process like those documented in your corpo standards database (that nobody follows but lots of experts are constantly wasting money/time to “improve”). It’s a messy, iterative, jagged, mistake discovering and correcting intellectual endeavor.

I usually start using CCP by spending a fair amount of time struggling to define the context: bounding, iterating, and sketching fuzzy lines around what I think is in and what is out of scope. Next, I dive into the content sub-process, using the context info to conjure up solution candidates and simulate them in my head at the speed of thought. The first details of the process that should be employed to bring the solution out of my head and into the material world usually trickle out naturally from the info generated during the content definition sub-process. Herky-jerky, iterative jumping between CCP sub-processes, mental simulation, looping, recursion, and sketching are key activities that I perform during the execution of CCP.

What’s your take on CCP? Do you think it’s generic enough to cover a large swath of socio-technical problem categories/classes? What general problem solving process(es) do you use?

The Requirements Landscape

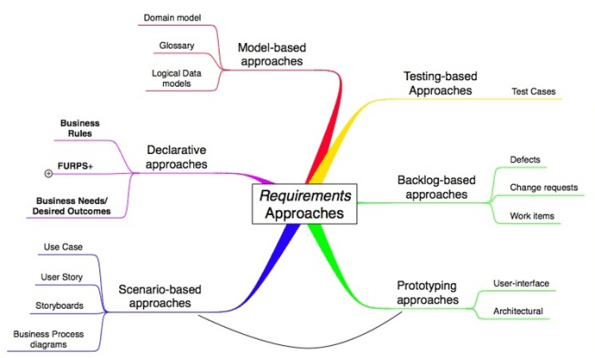

Kurt Bittner, of Ivar Jacobson International, has written a terrific white paper on the various approaches to capturing requirements. The mind map above was copied and pasted from Kurt’s white paper.

In his paper, Bittner discusses the pluses and minuses of each of his defined approaches. For the text-based “declarative” approaches, he states the pluses as: “they are familiar” and “little specialized training” is needed to write them. Bittner states the minuses as:

- They are “poor at specifying flow behavior”

- It’s “hard to connect related requirements”

IMHO, as systems get more and more complex, these shortcomings lead to bigger and bigger schedule, cost, and quality shortfalls. Yet, despite the advances in requirements specification methodologies nicely depicted in Bittner’s mind map, defense/aerospace contractors and their bureaucratic government customers seem to be forever married to the text-based “shall” declarative approach of yesteryear. Dinosaur mindsets, the lack of will to invest in corpo-wide training, and expensive past investments in obsolete and entrenched text-based requirements tools have prevented the newer techniques from gaining much traction. Do you think this encrusted way of specifying requirements will change anytime soon?

Complexity Explosion

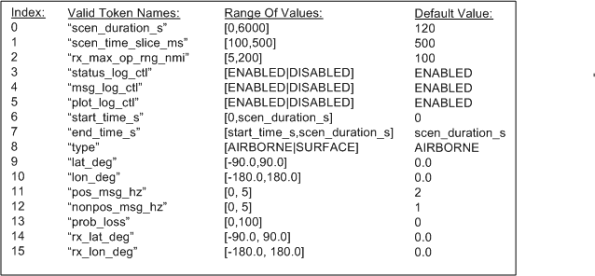

I’m in the process of writing a C++ program that will synthesize and inject simulated inputs into an algorithm that we need to test for viability before including it in one of our products. I’ve got over 1000 lines of code written so far, and about another 1000 to go before I can actually use it to run tests on the algorithm. Currently, the program requires 16 multi-valued control inputs to be specified by the user prior to running the simulation. The inputs define the characteristics of the simulated input stream that will be synthesized.

Even though most of the control parameters are multi-valued, assume for simplicity that they are all binary-valued and can be set to either “ON” or “OFF”. It then follows that there are 2^16 = 65,536 possible starting program states. When (not if) I need to add another control parameter to increase functionality, the number of states will soar to 2^17 = 131,072, and that’s only if the new parameter is binary; a multi-valued one would push the count even higher. D’oh! OMG! Holy shee-ite!

Is it possible to set up the program, run it, and evaluate its generated outputs against its inputs for each of these initial input scenarios? Never say never, but it’s not economically viable. Even if the setup and run activities can be “automated”, manually calculating the expected outputs and comparing the actual outputs against them for each starting state is impractical. I’d need to write another program to test the program, and then write another program to test the program that tests the program that tests the first program. This recursion would go on indefinitely, and errors could be made at any step of the way. Bummer.

No matter what the “experts” who don’t have to do the work themselves say, in programming situations like this, you can’t “automate” away the need for human thought and decision making. Based on knowledge, experience, and, more importantly, fallible intuition, I have to judiciously select a handful of the 65,536 starting states to run. Even then, manually calculating the expected outputs for each of these scenarios is impractical and error-prone, because the state machine algorithm that processes the inputs is itself relatively dense and complicated. So what I’m planning to do instead is visually and qualitatively scan the recorded outputs of each program run for a select few of the 65,536 states that I “feel” are important. I’ll intuitively analyze the results for anomalies in relation to the 16 chosen control input values.

Got a better way that’s “practical”? I’m all ears, except for a big nose and a bald head.

“Nothing is impossible for the man who doesn’t have to do it himself.” – A. H. Weiler

Mista Level

“Design is an intimate act of communication between the designer and the designed” – W. L. Livingston

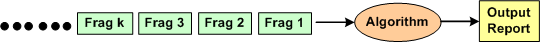

I’m currently in the process of developing an algorithm that is required to accumulate and correlate a set of incoming, fragmented messages in real-time for the purpose of producing an integrated and unified output message for downstream users.

The figure above shows a context diagram centered around the algorithm under development. The input is an unending, 24×7, high-speed, fragmented stream of messages that can exhibit a fair amount of variety in behavior, including lost, corrupted, and/or misordered fragments. In addition, fragmented message streams from multiple “sources” can be interlaced with each other in a non-deterministic manner. The algorithm needs to separate the input streams by source, maintain and update an internal real-time database that tracks all sources, and periodically transmit source-specific output reports when certain validation conditions are satisfied.

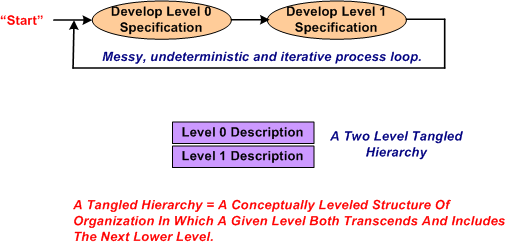

After studying literally 1000s of pages of technical information that describe the problem context that constrains the algorithm, I started sketching out and “playing” with candidate algorithm solutions at an arbitrary and subjective level of abstraction. Call this level of abstraction L0. After looping around and around in the L0 thought space, I “subjectively decided” that I needed a second, more detailed but less abstract, level of definition, L1.

After maniacally spinning around within and between the two necessarily entangled hierarchical levels of definition, I arrived at a point of subjectively perceived stability in the design.

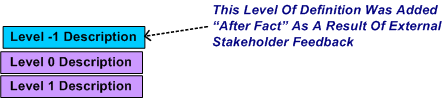

After receiving feedback from a fellow project stakeholder who needed an even more abstract level of description to communicate with other, non-development stakeholders, I decided that I mista level. However, I was able to quickly conjure up an L-1 description from the pre-existing lower level L0 and L1 descriptions.

Could I have started the algorithm development at L-1 and iteratively drilled downward? Could I have started at L1 and iteratively “syntegrated” upward? Would a single-level (L-1, L0, or L1) specification be sufficient for all downstream stakeholders to use? The answers to all these questions, and to others like them, are highly subjective. I chose the jagged and discontinuous path that I traversed based on real-time situational assessment in the now, not based on some one-size-fits-all, step-by-step corpo-approved procedure.