Archive

One Step Forward, N-1 Steps Back

For the purpose of entertainment, let’s assume that the following 3-component system has been deployed and is humming along providing value to its users:

Next, assume that a 4-sprint enhancement project that brought our enhanced system into being has been completed. During the multi-sprint effort, several features were added to the system:

OK, now that the system has been enhanced, let’s say that we’re kicking back and doing our project post-mortem. Let’s look at two opposite cases: the Ideal Case (IC) and the Worst Case (WC).

First, the IC:

During the IC:

- we “embraced” change during each work-sprint,

- we made mistakes, acknowledged and fixed them in real-time (the intra-sprint feedback loops),

- the work of Sprint X fed seamlessly into Sprint X+1.

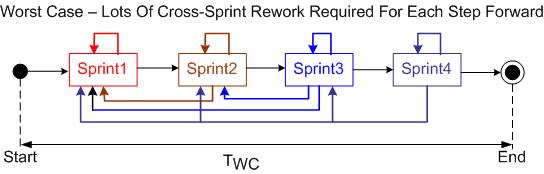

Next, let’s look at what happened during the WC:

Like the IC, during each WC work-sprint:

- we “embraced” change during each work-sprint,

- we made mistakes, acknowledged and fixed them in real-time (the intra and inter-sprint feedback loops),

- the work of Sprint X fed seamlessly into Sprint X+1.

Comparing the IC and WC figures, we see that the latter was characterized by many inter-sprint feedback loops. For each step forward there were N-1 steps backward. Thus, TWC >> TIC and $WC >> $IC.

WTF? Why were there so many inter-sprint feedback loops? Was it because the feature set was ill-defined? Was it because the in-place architecture of the legacy system was too brittle? Was it because of scope creep? Was it because of team-incompetence and/or inexperience? Was it because of management pressure to keep increasing “velocity” – causing the team to cut corners and find out later that they needed to go back often and round those corners off?

So, WTF is the point of this discontinuous, rambling post? I dunno. As always, I like to make up shit as I go.

After-the-fact, I guess the point can be that the same successes or dysfunctions can happen during the execution of an agile project or during the execution of a project executed as a series of “mini-waterfalls“:

- ill-defined requirements/features/user-stories/function-points/use-cases (whatever you want to call them)

- working with a brittle, legacy, BBOM

- team incompetence/inexperience

- scope creep

- schedule pressure

Ultimately, the forces of dysfunction and success are universal. They’re independent of methodology.

The Least Used Option

“We need to estimate how many people we need, how much time, and how much money. Then we’ll know when we’re running late and we can, um, do something.”

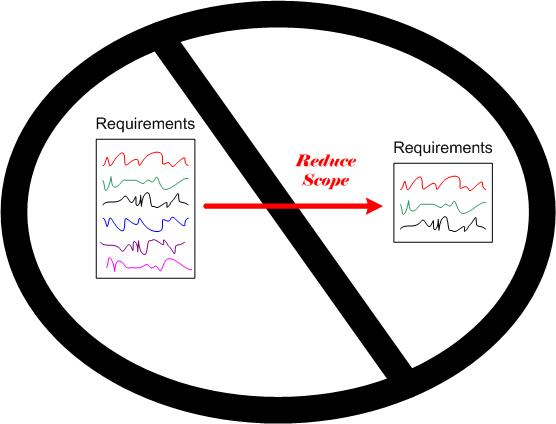

OK, assuming we are indeed running late and, as ever, “schedule is king“. WTF are our options?

- We can add more people.

- We can explicitly or (preferably) implicitly impose mandatory overtime; paid or (preferably) unpaid.

- We can reduce the project scope.

The least used option, because it’s the only one that would put management in an uncomfortable position with the customer(s), is the last one. This, in spite of the fact that it is the best option for the team’s well being over both the short and long term.

Connected By Assumptions

“The connections between modules are the assumptions which the modules make about each other.” – David Parnas

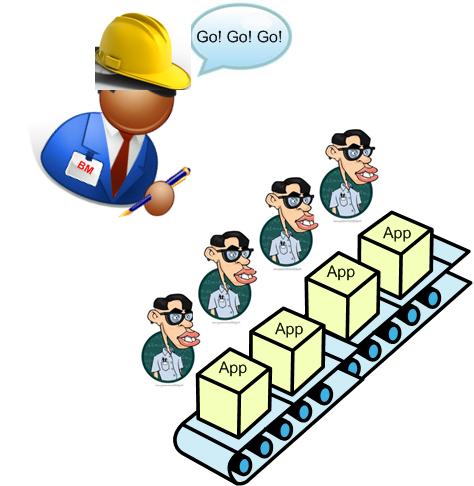

Agile Software Factories

Agile Overload

Since I buy a lot of Kindle e-books, Amazon sends me book recommendations all the time. Check out this slew of recently suggested books:

My fave in the list is “Agile In A Flash“. I’d venture that it’s written for the ultra-busy manager on-the-go who can become an agile expert in a few hours if he/she would only buy and read the book. What’s next? Agile Cliff notes?

“Agile” software development has a lot going for it. With its focus on the human-side of development, rapid feedback control loops to remove defects early, and its spirit of intra-team trust, I can think of no better way to develop software-intensive systems. It blows away the old, project-manager-is-king, mechanistic, process-heavy, and untrustful way of “controlling” projects.

However, the word “agile” has become so overloaded (like the word “system“) that….

Everyone is doing agile these days, even those that aren’t – Scott Ambler

Gawd. I’m so fed up with being inundated with “agile” propaganda that I can’t wait for the next big silver bullet to knock it off the throne – as long as the new king isn’t centered around the recently born, fledgling, SEMAT movement.

What about you, dear reader? Do you wish that the software development industry would move on to the next big thingy so we can get giddily excited all over again?

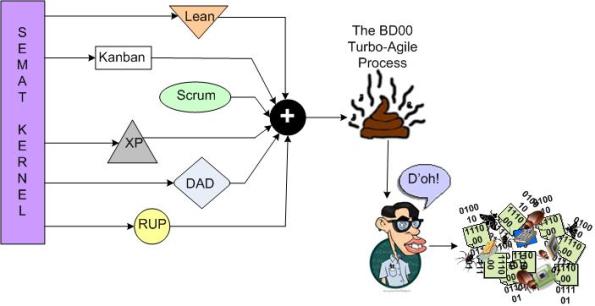

Going Turbo-Agile

I’m planning on using the state of the art SEMAT kernel to cherry-pick a few “best practices” and concoct a new, proprietary, turbo-agile software development process. The BD00 Inc. profit deluge will come from teaching 1 hour certification courses all over the world for $2000 a pop. To fund the endeavor, I’m gonna launch a Kickstarter project.

What do you think of my slam dunk plan? See any holes in it?

Alternative Considerations

Before you unquestioningly accept the gospel of the “evolutionary architecture” and “emergent design” priesthood, please at least pause to consider these admonitions:

Give me six hours to chop down a tree and I will spend the first four sharpening the axe – Abe Lincoln

Measure twice, cut once – Unknown

If I had an hour to save the world, I would spend 59 minutes defining the problem and one minute finding solutions – Albert Einstein

100% test coverage is insufficient. 35% of the faults are missing logic paths – Robert Glass

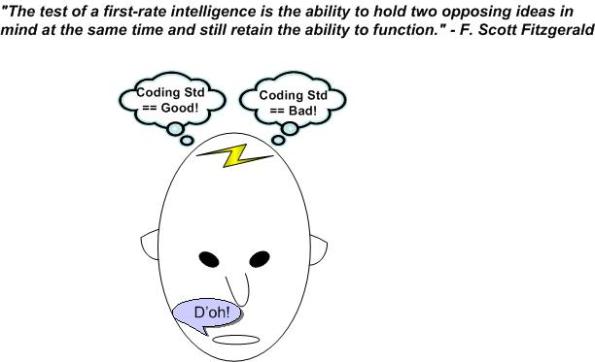

The Ability To Function

While writing the “Rule-Based Safety” post of a few days ago, this quote kept interfering with my thoughts:

Whenever I end up simultaneously holding two opposing ideas in my head, most of the time one of them automatically wins the battle quickly and boots out the loser. Phew, the victory relieves the mental tension. On the down-side, the winner is much too effective at preventing the opposition from ever entering the contemplation chamber again. I hate when that happens.

Rule-Based Safety

In this interesting 2006 slide deck, “C++ in safety-critical applications: the JSF++ coding standard“, Bjarne Stroustrup and Kevin Carroll provide the rationale for selecting C++ as the programming language for the JSF (Joint Strike Fighter) jet project:

First, on the language selection:

- “Did not want to translate OO design into language that does not support OO capabilities“.

- “Prospective engineers expressed very little interest in Ada. Ada tool chains were in decline.“

- “C++ satisfied language selection criteria as well as staffing concerns.“

They also articulated the design philosophy behind the set of rules as:

- “Provide “safer” alternatives to known “unsafe” facilities.”

- “Craft rule-set to specifically address undefined behavior.”

- “Ban features with behaviors that are not 100% predictable (from a performance perspective).”

Note that because of the last bullet, post-initialization dynamic memory allocation (using new/delete) and exception handling (using throw/try/catch) were verboten.

Interestingly, Bjarne and Kevin also flipped the coin and exposed the weaknesses of language subsetting:

What they didn’t discuss in the slide deck was whether the strengths of imposing a large coding standard on a development team outweigh the nasty weaknesses above. I suspect it was because the decision to impose a coding standard was already a done deal.

Much as we don’t want to admit it, it all comes down to economics. How much is the lowering of the risk of loss of life worth? No rule set can ever guarantee 100% safety. Like trying to move from 8 nines of availability to 9 nines, the financial and schedule costs in trying to achieve a Utopian “certainty” of safety start exploding exponentially. To add insult to injury, there is always tremendous business pressure to deliver ASAP and, thus, unconsciously cut corners like jettisoning corner-case system-level testing and fixing hundreds of “annoying” rules violations.

Does anyone have any data on whether imposing a strict coding standard actually increases the safety of a system? Better yet, is there any data that indicates imposing a standard actually decreases the safety of a system? I doubt that either of these questions can be answered with any unbiased data. We’ll just continue on auto-believing that the answer to the first question is yes because it’s supposed to be self-evident.

A Danger To Themselves And Others

“Efficient systems are dangerous to themselves and others” – John Gall

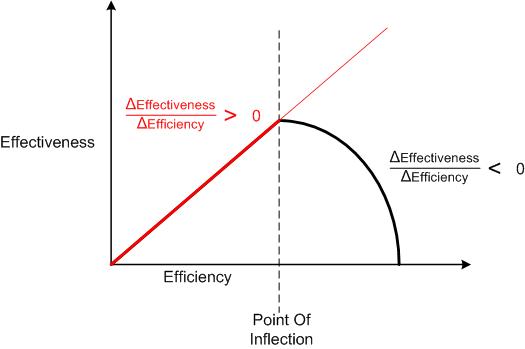

A new system is always established with the goal of outright solving, or at least mitigating, a newly perceived problem that can’t be addressed with an existing system. As long as the nature of the problem doesn’t change, continuously optimizing the system for increased efficiency also joyfully increases its effectiveness.

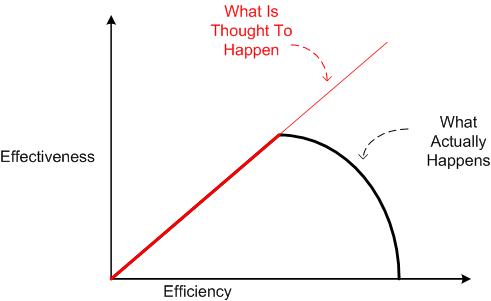

However, the universe being as it is, the nature of the problem is guaranteed to change and there comes a time where the joy starts morphing into sorrow. That’s because the more efficient a system becomes over time, the more rigid its structure and behavior becomes and the less open to change it becomes. And the more resistant to change it becomes, the more ineffective it becomes at achieving its original goal – which may no longer even be the right goal to strive for!

In the manic drive to make a system more efficient (so that more money can be made with less effort), it’s difficult to detect when the inevitable joy-to-sorrow inflection point manifests. Most managers, being cost-reduction obsessed, never see it coming – and never see that it has swooshed by. Instead of changing the structure and/or behavior of the system to fit the new reality, they continue to tweak the original structure and fine tune the existing behaviors of the system’s elements to minimize the delay from input to output. Then they are confounded when (if?) they detect the decreased effectiveness of their actions. D’oh! I hate when that happens.