
Positive Or Negative, Meaning Or No Meaning?

In his book, “The Mathematical Theory of Communication”, Claude Shannon positively correlates information with entropy:

Information = f(Entropy)

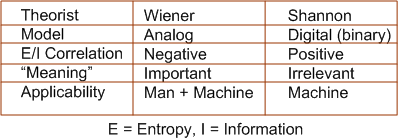

When I read that several years ago, it unsettled me. I’m only a layman, but it didn’t make sense. After all, doesn’t information represent order, and entropy its opposite, chaos? Shouldn’t a minus sign connect the two? Norbert Wiener, whom Shannon bounced ideas off of (and vice versa), thought so. His entropy-information connection included the minus sign: he treated the amount of information as simply the negative of entropy.
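Here’s a minimal Python sketch (my own illustration, not code from either man) that puts numbers on the sign question. `shannon_entropy` computes Shannon’s H for a few made-up sources; `wiener_information` is a hypothetical helper that just negates H, a simplified stand-in for Wiener’s “information as negative entropy” view rather than his actual formulation. Shannon’s formula does contain a minus sign, but only to make the result positive.

```python
import math

def shannon_entropy(probs):
    """Shannon's entropy H = -sum(p * log2(p)), in bits per symbol.

    The minus sign only flips log2(p), which is never positive for a
    probability, so H comes out >= 0. Zero-probability symbols are
    skipped, since p * log2(p) -> 0 as p -> 0.
    """
    return -sum(p * math.log2(p) for p in probs if p > 0)

def wiener_information(probs):
    """Hypothetical helper: information taken as the *negative* of entropy."""
    return -shannon_entropy(probs)

# Three made-up sources, from most predictable to least predictable.
sources = {
    "biased coin (90/10)": [0.9, 0.1],
    "fair coin": [0.5, 0.5],
    "fair 8-sided die": [1 / 8] * 8,
}

for name, probs in sources.items():
    h = shannon_entropy(probs)
    print(f"{name:20s} Shannon: {h:+.3f} bits   Wiener-style: {wiener_information(probs):+.3f}")
```

The less predictable the source, the larger Shannon’s H, which is exactly the positive correlation that bothered me. The Wiener-style column carries the same magnitudes with the sign flipped.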

Shannon’s theory also stripped “meaning”, which is person-specific and can’t be captured in tractable equations, out of information. He treats information as a string of bland ‘0’ and ‘1’ bits transported from one location to another by a matched, but insentient, transmitter-receiver pair. Wiener kept “meaning” in information, and he kept his feedback-loop-centric equations analog. This let his cybernetic theory apply to both man and machine, and make assertions like: “those who can control the means of communication in a system will rule the roost”.
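To see what stripping out meaning looks like, here’s another small Python sketch of my own. `bits_per_symbol` is a hypothetical name, and estimating bits per character from the letter counts of a single string is a simplification (Shannon’s H describes the statistics of a message source, not one message). A sentence, the same words with the opposite meaning, and a meaningless jumble of the same letters all get the same score.

```python
import math
from collections import Counter

def bits_per_symbol(message):
    """Estimate entropy in bits per character from one message's letter counts.

    math.fsum is correctly rounded, so the result does not depend on the
    order in which the per-letter terms are added up.
    """
    n = len(message)
    counts = Counter(message).values()
    return -math.fsum((c / n) * math.log2(c / n) for c in counts)

original = "dog bites man"
reversed_meaning = "man bites dog"
nonsense = "".join(sorted(original))  # same letters, no meaning at all

for text in (original, reversed_meaning, nonsense):
    print(f"{text!r:18} {bits_per_symbol(text):.3f} bits/char")

print(bits_per_symbol(original) == bits_per_symbol(reversed_meaning) == bits_per_symbol(nonsense))  # True
```

Only the letter frequencies enter the calculation, so who bit whom never shows up in the number.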

Like most of my posts, this one points nowhere. I just thought I’d share it because others might find the Shannon-Wiener differences and likenesses as interesting and mysterious as I do.