Archive

Asynchronous Flows And Synchronous Transactions

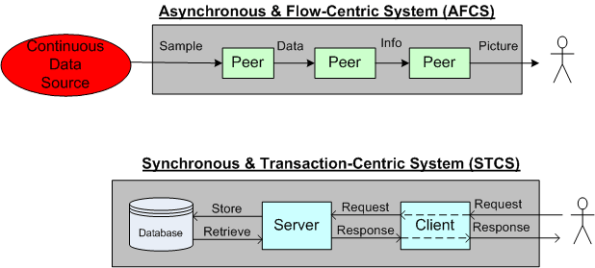

The figure below shows a pair of BD00 concocted models for two classes of systems; peer-to-peer and client-server:

The primary mission of an AFCS is to progressively transform a high rate stream of incoming raw samples into a higher level, abstract representation of some phenomena that’s important to its users. In an STCS, the system’s primary mission is to transform low rate user requests into information that’s important to its users.

In business support applications, STC systems dominate the scene. In aerospace and defense applications, AFC systems are king. Of course, the situation is never as simplistic as BD00 sez. Hybrid systems like the sensor-based command and control model below can be found everywhere.

For some reason (maybe market size and/or community culture and/or media exposure?), most software technology advancements (languages, patterns, methodologies, frameworks, etc) seem to emerge out of the STCS space. Those innovations that are “applicable” get adopted in the AFCS space. Hell, even those that are inapplicable (because they weren’t designed with performance as the top priority) get adopted.

A Hoarrific Failure

Work started (on the 503 Mark II software system) with a team of fifteen programmers and the deadline for delivery was set some eighteen months ahead in March 1965.

Although I was still managerially responsible for the 503 Mark II software, I gave it less attention than the company’s new products and almost failed to notice when the deadline for its delivery passed without event.

The programmers revised their implementation schedules and a new delivery date was set some three months ahead in June 1965. Needless to say, that day also passed without event.

I asked the senior programmers once again to draw up revised schedules, which again showed that the software could be delivered within another three months. I desperately wanted to believe it but I just could not. I disregarded the schedules and began to dig more deeply into the project.

The entire Elliott 503 Mark II software project had to be abandoned, and with it, over thirty man-years of programming effort, equivalent to nearly one man’s active working life, and I was responsible, both as designer and as manager, for wasting it.

The above story synopsis was extracted from Tony “Quicksort” Hoare‘s 1980-ACM Turing award lecture.

Mr. Hoare’s classic speech is the source of a few great quotes that have transcended time:

I conclude that there are two ways of constructing a software design: One way is to make it so simple that there are obviously no deficiencies and the other way is to make it so complicated that there are no obvious deficiencies. The first method is far more difficult…. No committee will ever do this until it is too late.

A feature which is included before it is fully understood can never be removed later.

At first I hoped that such a technically unsound project would collapse but I soon realized it was doomed to success.

The price of reliability is the pursuit of the utmost simplicity. It is a price which the

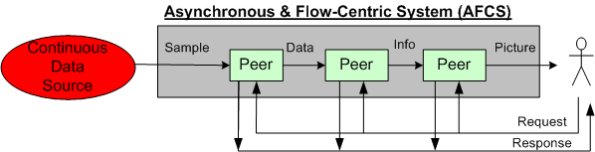

very rich find most hard to pay.The mistakes which have been made in the last twenty years are being repeated today on an even grander scale. (1980)

Dontcha think that last quote can be restated today as:

The mistakes which have been made in the last fifty years are being repeated today on an even grander scale.

Since man’s ability to cope with complexity is relentlessly being dwarfed by his propensity to create ever greater complexity, the same statement might probably be true 50 years hence, no?

A Friction-Based Separation Of Concerns

Alan Kay is one of the inventors of the Smalltalk programming language and a Turing award winner. During an interview with Dr. Dobbs’s Andrew Binstock, Mr. Kay had this to say:

American business is completely fucked up because it is all about competition. Our world was built for the good from cooperation. – Alan Kay

From the moment we stepped foot into the classroom and received our first gold star, we’ve been brainwashed with the BS idea that separation from, and competition with, other individuals is good and noble. In school, collaboration on assignments and tests is akin to “cheating“. In business, subjective reward systems pit team mates against each other for money and stature.

At least in school, everyone knows what the score is. There is no group purpose, vision, or mission; it’s all about individual achievement relative to other individuals. In business, so-called leaders constantly cry out for team work and collaboration while keeping idiotic policies/processes/procedures/structures in place that guarantee a friction-based separation of concerns between individuals and groups within the org. The reason this counterproductive “behavior” will continue unabated ad infinitum is because all the players involved in the game simply take it for granted. To “us” (yes, that includes you and me), it’s simply the way it’s always been and it’s simply the way it always should be. This thinking malady is so acute that not many people even try to search for alternatives. Those poor souls that do, are often ostracized into silence.

Annual Report – 2012

Fresh off the presses from the talented wordpress.com team, here are BD00’s vital blog stats for fiscal year 2012.

BD00’s sole proprietorship business met all of his lofty goals for 2012: zero revenues, zero expenses, and zero growth. The plan for 2013 calls for a 25% increase across the board. It’ll be tough, but optimal deployment of resources and flawless execution of strategy will guarantee success.

Boulders And Pebbles

When embarking on a Software Product Line (SPL) development, one of the first, far-reaching cost decisions to be tackled is the level of “granularity” of the component set. Obviously, you don’t want to develop one big, fat-ass, 5 million line monstrosity that has to have 1000s of lines changed/added/hacked for each customer “instantiation“. Gee, that’s probably how you operate now and why you’re tinkering with the idea of an SPL approach for the future.

On the other hand, you don’t want to build 1000s of 10K-line pieces that are a nightmare for composition, configuration, versioning and integration. For a given domain, there’s a “subjective” sweet spot somewhere between a behemoth 5M-line boulder and a basket of 10K-line pebbles. However, if you’re gonna err on one side or the other, err on the side of “bigger“:

…beware of overly fine-grained components, because too many components are hard to manage, especially when versioning rears its ugly head, hence “DLL hell.” – Martin Fowler (UML Distilled)

The primacy of system functions and system function groups allows a new member of the product line to be treated as the composition of a few dozen high-quality, high-confidence components that interact with each other in controlled, predictable ways as opposed to thousands of small units that must be regression tested with each new change. Assembly of large components without the need to retest at the lowest level of granularity for each new system is a critical key to making reuse work. – Brownsword/Clements (A Case Study In Successful Product Line Development)

We Must!

Time For A Toga Party!

Three Degrees Of Distribution

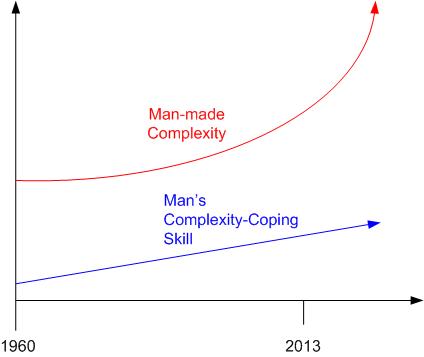

Behold the un-credentialed and un-esteemed BD00’s taxonomy of software-intensive system complexity:

How many “M”s does the system you’re working on have? If the answer is three, should it really be two? If the answer is two, should it really be one? How do you know what number of “M”s your system design should have? When tacking on another “M” to your system design because you “have to“, what newly emergent property is the largest complexity magnifier?

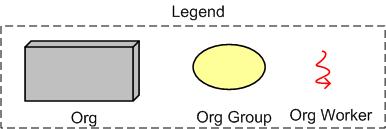

Now, replace the inorganic legend at the top of the page with the following organic one and contemplate how the complexity and “success” curves are affected:

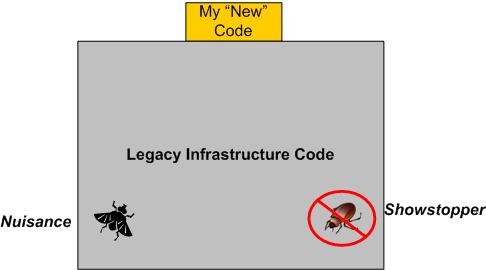

A Showstopper And A Nuisance

I recently spent 3.5 days hunting down and squashing a “showstopper” bug that ended up being a side effect of an earlier fix that I had made to eradicate a long-standing “nuisance” bug.

SIDEBAR: The nuisance bug occurred only during system shutdown. The system would crash on exit, but no data was lost or corrupted. It was long thought to be an “out of sequence” object destruction problem, but because hundreds of lines of nested destructor code are called during shutdown in multiple threads of execution and the customer never formally reported the bug (because the system is very rarely shutdown), it’s annihilation was put on the shelf – until I “fixed” it.

When I was initially told about the showstopper, I was confounded because the bug seemed to be located somewhere in the code of a simple feature that I had thought I tested pretty thoroughly. And yet, there it was, plainly obvious and easily reproducible – a crash that happens during runtime whenever a specific sequence of operator actions are performed. WTF?

With the system model below embedded in my genius mind, I thought the bug HAD to be located somewhere in the massive, preexisting, 150K line legacy code base. After all, the number of code lines I added to the beast in order to implement the feature was so small and unassuming that the odds favored my hypothesis.

Even though my hypothesis was that the new code I added had uncovered and triggered some other dormant bug deep in the bowels of the software, I first inspected the measly few lines I recently added to the code base. Of course, the inspection yielded no “aha, there it is” moment. Bummer.

Next, I fired up the debugger, sprinkled a bunch of breakpoints throughout the code, and stepped through my brilliant and elegantly simple code. I found that when the control of execution descended into the netherworld below my impeccable work, the bug came out of hiding – crash! However, since a bunch of event driven callbacks were triggered each time the execution of control left my code, I couldn’t trace the execution path so easily.

Exasperated that the debugger didn’t tell me exactly where and what the freakin’ bug was, I started reading and reverse engineering (via targeted UML class and sequence diagram sketches) segments of the legacy code. Since it was my first focused foray down into the dungeon, it was a slow going, but beneficial, learning experience.

Finally, after a couple of days of inspection, reverse engineering, and a bazillion debugger runs, I stumbled upon a note written by yours truly in one of the infrastructure callback functions:

“The line of code below was commented out because it triggers a crash on shutdown“.

Bingo, a light went off! Quickly, I uncommented out the line of code and reran the program. Yepp, the bug was gone! As I initially thought, the critter did turn out to be living within the infrastructure, but I had unwittingly put it there a while ago in order to kill the long-standing “nuisance” shutdown bug. Ain’t life grand?

Of course, the tradeoff for re-enabling the line of code that killed the nasty bug is that the nuisance bug is alive and well again. And no, unless I’m directly ordered to, I ain’t gonna go uh huntin’ fer it aginn. No good deed goes unpunished.

Defect Density

ln “Software’s Hidden Clockwork: A General Theory of Software Defects“, Les Hatton presents these two interesting charts:

The thing I find hard to believe is that Les has concluded that there is no obvious significant relationship between defect density and the choice of programming language. But notice that he doesn’t seem to have any data points on his first chart for the relatively newer, less “tricky“, and easier-to-program languages like Java, C#, Ruby, Python, et al.

So, do you think Les might have jumped the gun here by prematurely asserting the virtual independence of defect density on programming language?