Archive

Design, And THEN Development

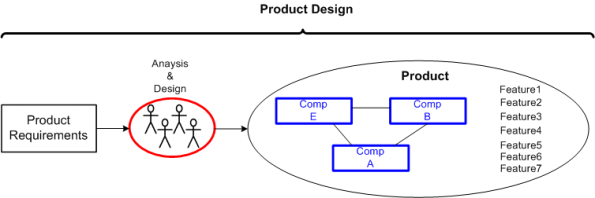

First, we have product design:

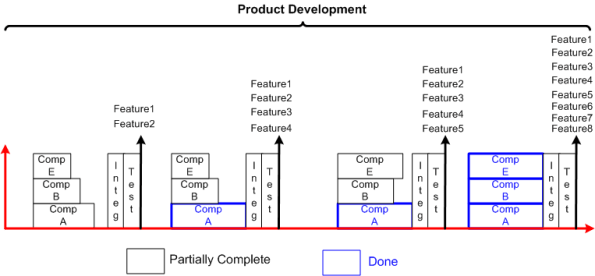

Second, we have product development:

At scale, it’s consciously planned design first and development second: design-driven development. It’s not the other way around, development-driven design.

The process must match the product, not the other way around. Given a defined process, whether it’s agile-derived or “traditional”, attempting to jam-fit a product development effort into a “fits all” process is a recipe for failure. Err, maybe not?

The Efficient, The Troubled, The Dysfunctional, The Apocalyptic

Crash!!!!

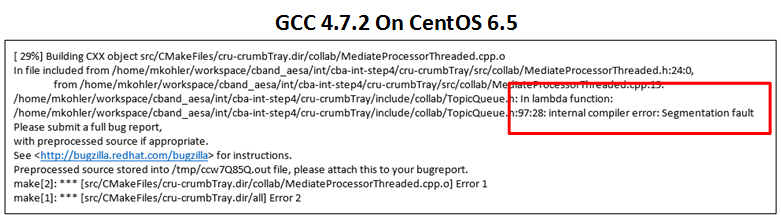

Holy crap! I can’t remember the last time I crashed a GCC C++ compiler. But looky here at this seg fault:

Well, I didn’t cause the crash, a colleague did. When was the last time you crashed a compiler?

RaiiGuard

In my application domain, the following shared object pattern occurs quite frequently:

Typically, Shared is a small, dynamically changing, in-memory database, and two or more threads need to perform asynchronous CRUD operations on its content. I’ve seen, and have written, code like this more times than I can count:

    Shared so{};

    //The following idiom is exception-unsafe
    so.lock();
    so.doStuff(); //doStuff() better not throw!
    so.unlock();

The problem with the code is that if doStuff() (or any other code invoked within its implementation) throws an exception, then unlock() won’t get executed. Thus, any other thread that attempts to access so by calling so.lock() will get blocked… forever… deadlock city.

Here is one way of achieving exception safety, but it’s clunky:

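    Shared so{};

    //Clunky way to achieve exception safety
    so.lock();
    try {
        so.doStuff(); //doStuff() can throw!
    }
    catch(...) {
        so.unlock(); //release the lock on the error path
        throw;       //rethrow so the unlock() below isn't reached a second time
    }
    so.unlock();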

I use Stroustrup’s classic RAII (Resource Acquisition Is Initialization) technique to achieve exception safety:

    Shared so{};
    RaiiGuard<Shared> guardedSo{so};
    guardedSo->doStuff(); //It's OK for doStuff() to throw!

Since the solution involves constructing a RaiiGuard<Shared> object around the Shared object it protects from data races, and destructing it when the scope ends, the tradeoff is an exception-safety gain for, at most, a bit of performance loss.

Here is the simple RaiiGuard class template that I use to achieve the non-clunky way to exception safety:

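    template<typename T>
    class RaiiGuard {
    public:
        //Lock the wrapped object on construction
        explicit RaiiGuard(T& obj) : _obj(obj) {
            _obj.lock();
        }

        //Copying a guard would unlock the same object twice
        RaiiGuard(const RaiiGuard&) = delete;

        //Access the guarded object while the lock is held
        T* operator->() {
            return &_obj;
        }

        //Unlock on destruction, including during stack unwinding
        ~RaiiGuard() {
            _obj.unlock();
        }

    private:
        T& _obj;
    };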

It locks access to an object of type T on construction and unlocks it on destruction – the definition of RAII and RRID (Resource Release Is Destruction). The user obtains access to the “monitored” underlying object by invoking T* RaiiGuard<T>::operator->().

The only requirement imposed on the template type T is that it implements the lock() and unlock() functions as follows:

    #include <mutex>

    class T {
    public:
        void lock() {
            m.lock();
        }
        void unlock() {
            m.unlock();
        }
        void doStuff() {
            //CRUD operations on the shared content go here
        }
    private:
        mutable std::mutex m;
    };

Since they all provide lock()/unlock() pairs, any of the standard library mutex types can be substituted for std::mutex.
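A minimal sketch for checking the exception-safety claim on a single thread. The `locked` flag, `isLocked()`, and the two function names are hypothetical, demo-only additions that are not part of the original Shared or RaiiGuard; `doStuff()` is made to throw on purpose, and the guard is a condensed copy of the template above with copying disabled.

```cpp
#include <mutex>
#include <stdexcept>

// Hypothetical stand-in for Shared. The `locked` flag and isLocked() exist
// only so that a single thread can observe whether the lock is held.
class Shared {
public:
    void lock()   { m.lock(); locked = true; }
    void unlock() { locked = false; m.unlock(); }
    bool isLocked() const { return locked; }
    void doStuff() { throw std::runtime_error("doStuff() failed"); }
private:
    std::mutex m;
    bool locked = false;
};

// Condensed copy of RaiiGuard, with copying disabled.
template<typename T>
class RaiiGuard {
public:
    explicit RaiiGuard(T& obj) : _obj(obj) { _obj.lock(); }
    RaiiGuard(const RaiiGuard&) = delete;
    ~RaiiGuard() { _obj.unlock(); }
    T* operator->() { return &_obj; }
private:
    T& _obj;
};

// Manual lock()/unlock(): returns true if the lock was left held after the throw.
bool manualIdiomLeavesLockHeld() {
    Shared so{};
    try {
        so.lock();
        so.doStuff();   // throws, so the unlock() on the next line is skipped
        so.unlock();
    } catch (const std::runtime_error&) {}
    const bool stillLocked = so.isLocked();
    if (stillLocked) so.unlock();   // tidy up before the mutex is destroyed
    return stillLocked;
}

// RaiiGuard: returns true if the lock was released despite the throw.
bool guardReleasesLock() {
    Shared so{};
    try {
        RaiiGuard<Shared> guardedSo{so};
        guardedSo->doStuff();   // throws; stack unwinding runs ~RaiiGuard()
    } catch (const std::runtime_error&) {}
    return !so.isLocked();
}
```

Calling both functions from a test or from main() should show the manual idiom leaving the lock held and the guard releasing it. The flag is read without synchronization, so this check is only meaningful in a single-threaded demo.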

How do you achieve exception safety for the shared object pattern?
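One possible answer that needs no custom guard, sketched under assumptions: because a type like Shared exposes lock() and unlock(), it meets the standard library's BasicLockable requirements, so std::lock_guard can wrap it directly. The `increment()` member, the `count` field, and `useWithLockGuard()` are hypothetical names invented for this illustration.

```cpp
#include <mutex>
#include <stdexcept>

// Hypothetical Shared with one operation that can be told to throw.
class Shared {
public:
    void lock()   { m.lock(); }
    void unlock() { m.unlock(); }
    int increment(bool fail) {
        if (fail) throw std::runtime_error("increment() failed");
        return ++count;
    }
private:
    std::mutex m;
    int count = 0;
};

// std::lock_guard<Shared> calls so.lock() in its constructor and
// so.unlock() in its destructor, including when increment() throws.
int useWithLockGuard(Shared& so, bool fail) {
    std::lock_guard<Shared> guard{so};
    return so.increment(fail);
}
```

Unlike RaiiGuard, std::lock_guard offers no operator->(), so the caller keeps using the Shared object directly while the guard is alive. Which of the two reads better is a matter of taste.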

All Tucked In

My wookie is all tucked in and fast asleep…

As for me, in a few short hours I’ll be on my way to Nawlins’ for this year’s “trip of a lifetime“. It’s Mardi Gras time and I’m all swagged up!!!!!

It’s Hexed

I followed the development of C++11 by the ISO WG21 committee very closely for several years before the standard was finally released in 2011. The painfully slow-moving effort to update the language was dubbed “C++0x” because no one knew what year (if any!) the standard would be ratified. Since it wasn’t labeled “C++xx”, everyone was hoping that the year of publication would be 2009 at the latest: “C++09”.

At the time, I stumbled across and purchased a polo shirt with this comical logo embroidered on the breast:

I can’t remember the year in which I bought the shirt, but it’s obviously at least 6 years old. I still wear it today. Someday, it may be worth a bunch of bitcoins. LOL.

Gorillas In Our Midst

“Too Big To Fail” pushed us to the edge of the abyss in 2008. So how could our so-called government leaders let this happen?

I think I know how it was done: legal bribery of government officials (aka “lobbying”) and the cozy, revolving-door relationship between Wall St. and the US Treasury.

Why is Bernie Sanders the only US presidential candidate who even mentions breaking up these four gorillas in our midst?

Become An Instant Trillionaire!

For only $39.95 USD plus tax, you can become a trillionaire in Zimbabwe. Woot! The downside is that your trillion dollars won’t even be enough to buy a loaf of bread.

Since central bankers and free-spending governments have been stoking the flame of inflation forever, people have been hoodwinked into thinking that inflation is, like gravity, a force we must live with.

In an inflationary environment, people, knowing their hard-earned money will lose value over time (minute by minute in a hyper-inflationary apocalypse), are motivated to spend and borrow as soon as possible. Inflation works just like compound interest does – but in reverse. It doesn’t make economic sense to save for the future when you know that the $1 you earned today will be worth much less in 5-10-20-30 years.
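To put a number on “compound interest in reverse”, here is a small sketch. It assumes a constant annual inflation rate, which is a simplification, and the function name `purchasingPower` is made up for this illustration.

```cpp
#include <cmath>

// Purchasing power, in today's dollars, of `amount` after `years` of
// constant annual inflation `rate` (0.03 means 3%). Compound interest
// multiplies by (1 + rate)^years; inflation divides by the same factor.
double purchasingPower(double amount, double rate, int years) {
    return amount / std::pow(1.0 + rate, years);
}
```

Under an assumed 3% annual rate, purchasingPower(1.0, 0.03, 30) comes out at roughly 0.41, so a dollar saved today would buy about 41 cents’ worth of goods in 30 years.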

Everyone knows that rampant inflation can quickly destroy a society, but to most textbook economists a “little” inflation is required to keep an economy growing by catalyzing buying, borrowing, and investing behaviors. But, how much is a “little” inflation?

With deflation, your money doesn’t just retain its value over time; its purchasing power increases. In contrast to a little inflation, classical economists theorize that any level of deflation is bad for economies because it encourages people to save their money (they call it “hoarding” to give it a negative connotation) and discourages investment in the future.

If deflation is so bad, why has it worked so splendidly in the computer industry? Relentless price decreases for computer hardware over time have not stopped companies from competing with each other, or investing in new products, or innovating for the future. Deflation hasn’t stopped people from buying a $1000 computer today that they know will cost $500 next year.

Unlike all fiat currencies backed by “the full faith and credit” of untrustworthy governments, bitcoins are deflationary. The Bitcoin protocol is designed to dispassionately stop the minting presses when 21 million bitcoins have been created and issued. If you own some bitcoins and the Bitcoin economy manages to survive the mighty external AND internal forces vying to tear it apart, you’ll be a happy camper some time down the road.
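The 21 million figure falls out of the block reward schedule. The sketch below sums that schedule using parameters that are not stated in this post but are, as far as I know, the protocol's well-known consensus values: a 50 BTC starting reward that halves every 210,000 blocks, counted in whole satoshis (1 BTC = 100,000,000 satoshis). The constant and function names are mine.

```cpp
#include <cstdint>

// Assumed consensus parameters (not taken from this post).
constexpr std::int64_t kSatoshisPerCoin  = 100000000;
constexpr std::int64_t kInitialSubsidy   = 50 * kSatoshisPerCoin;
constexpr std::int64_t kBlocksPerHalving = 210000;

// Sum the reward over every halving era until integer halving reaches zero.
std::int64_t totalSupplySatoshis() {
    std::int64_t total = 0;
    for (std::int64_t subsidy = kInitialSubsidy; subsidy > 0; subsidy /= 2) {
        total += subsidy * kBlocksPerHalving;
    }
    return total;
}
```

Because each halving rounds down to a whole satoshi, the sum lands slightly below 21 million coins (2,099,999,997,690,000 satoshis) instead of hitting it exactly.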

At This Juncture…

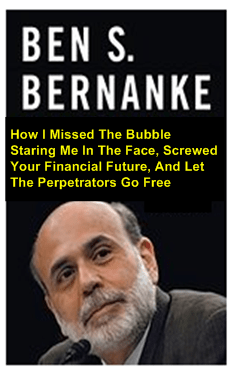

Former US Federal Reserve chairman Ben Bernanke recently wrote this tribute to self:

As the chairman of the Fed during the 2008 financial meltdown, Mr. Bernanke made the following statement just prior to the calamity that destroyed the financial well-being of thousands of people whilst leaving the super rich bankstas responsible for the fiasco unscathed:

At this juncture, however, the impact on the broader economy and financial markets of the problem in the subprime market seems likely to be contained – Ben Bernanke, Testimony before the Joint Economic Committee, March 28, 2007

Maybe the book should have been truthfully titled:

Ready And Waiting

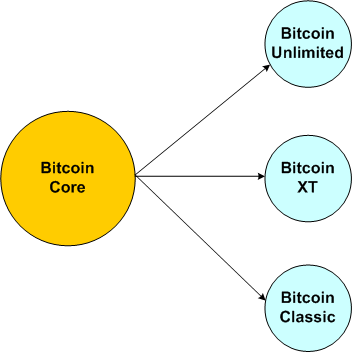

Because the Bitcoin Core software development team has stubbornly refused to raise the protocol’s maximum block size in a timely fashion, three software forks are ready and waiting in the wings to save the day if the Bitcoin network starts to become overly unreliable due to continuous growth in usage.

So, why would the Bitcoin Core team drag its feet for 2+ years on solving the seemingly simple maximum block size issue? Perhaps it’s because some key members of the development team, most notably Greg Maxwell, are paid by a commercial company whose mission is to profit from championing side-chain technology: Blockstream.com. Greg Maxwell is a Blockstream co-founder.

I don’t know the deployment status of Bitcoin Unlimited or Bitcoin Classic, but I do know that a number of key Bitcoin community players experimented with running the Bitcoin XT software (authored by long-time, dedicated Bitcoiner Gavin Andresen and former Bitcoiner Mike Hearn). The Bitcoin Core team was so offended by the transgression that they and/or their followers executed a series of DDoS attacks on XT nodes to punish the offenders and coerce the infidels into reverting to the Bitcoin Core code.

Since the date on which Bitcoin XT’s increased maximum block size functionality was supposed to kick in has passed, some people have said that Bitcoin XT has outright failed. However, BD00 thinks that Bitcoin XT and/or its siblings will be adopted quicker than you can say “Blockstream” if the Bitcoin network becomes systemically unusable under a frozen Bitcoin Core code base. After all, there is at least $6 billion of market value and $1 billion of investment capital staked on the success of Bitcoin. So if push comes to shove, Bitcoin Core just might get shoved out into the cold by the rest of the community (users, miners, exchanges).