Is It Safe?

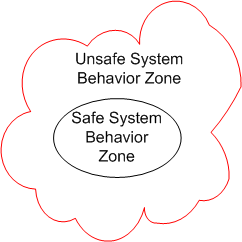

Assume that we place a large socio-technical system, designed to perform some safety-critical mission, into operation. During the mission, the system’s behavior at any point in time (which emerges from the interactions between its people and machines) can be partitioned into two zones: safe and unsafe.

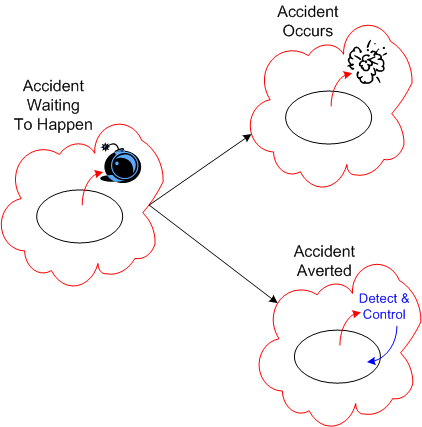

Since the second law of thermodynamics, like all natural laws, is impersonal and unapologetic, the system’s behavior will eventually drift into the unsafe behavior zone. Combine the effects of the second law with poor stewardship on the part of the system’s owner(s)/designer(s), and the frequency of trips into scary-land will increase.

A veer into the unsafe behavior zone doesn’t guarantee that an “unacceptable” accident will occur. If mechanisms to detect and correct unsafe behaviors are baked into the system design at the outset, the risk of an accident occurring can be lowered substantially.
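To make the detect-and-correct idea concrete, here is a minimal Python sketch. It assumes, purely for illustration, that "behavior" can be boiled down to a single number and that the safe zone is a fixed range; the names `SafetyMonitor`, `step`, and `simulate` are made up for this example, and a real socio-technical system would be nowhere near this tidy.

```python
from dataclasses import dataclass


@dataclass
class SafetyMonitor:
    """Hypothetical monitor: the safe zone is the closed range [safe_low, safe_high]."""
    safe_low: float
    safe_high: float
    corrections: int = 0  # how many unsafe excursions were detected and corrected

    def is_safe(self, value: float) -> bool:
        # Detection: is the observed behavior inside the safe zone?
        return self.safe_low <= value <= self.safe_high

    def step(self, value: float) -> float:
        # Correction: pull an unsafe value back to the nearest safe boundary.
        if self.is_safe(value):
            return value
        self.corrections += 1
        return min(max(value, self.safe_low), self.safe_high)


def simulate(readings, monitor):
    """Run each observed reading through the monitor and return the corrected trace."""
    return [monitor.step(r) for r in readings]


monitor = SafetyMonitor(safe_low=0.0, safe_high=10.0)
trace = simulate([5.0, 12.0, -3.0, 9.5], monitor)
print(trace, monitor.corrections)
```

The point of the sketch is only that detection and correction live inside the system from the start, so a drift into the unsafe zone gets caught on the same step it happens rather than after the accident.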

Alas, “safety”, like all the other important non-functional attributes of a system, is often neglected during system design. It’s unglamorous, it costs money, it adds design/development/test time to the sacred schedule, and the people who “do safety” are under-respected. The irony here is that after-the-accident, bolt-on safety detection and control kludges cost more and are less trustworthy than doing it right in the first place.