Unavailability II

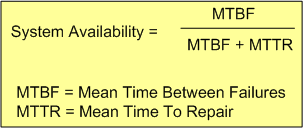

The availability of a software-intensive, electro-mechanical system can be expressed in equation form as:

With the complexity of modern day (and future) systems growing exponentially, calculating and measuring the MTBF of an aggregate of hardware and (especially) software components is virtually impossible.

Theoretically, the MTBF for an aggregate of hardware parts can be predicted if the MTBF of each individual part and how the parts are wired together, are “known“. However, the derivation becomes untenable as the number of parts skyrockets. On the software side, it’s patently impossible to predict, via mathematical derivation, the MTBF of each “part“. Who knows how many bugs are ready to rear their fugly heads during runtime?

From the availability equation, it can be seen that as MTTR goes to zero, system availability goes to 100%. Thus, minimizing the MTTR, which can be measured relatively easily compared to the MTBF ogre, is one effective strategy for increasing system availability.

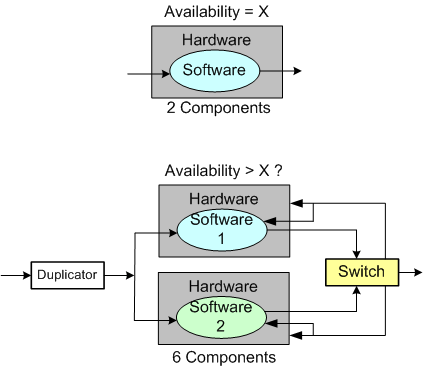

The “Time To Repair” is simply equal to the “Time To Detect A Failure” + “The Time To Recover From The Failure“. But to detect a failure, some sort of “smart” monitoring device (with its own MTBF value) must be added to the system – increasing the system’s complexity further. By smart, I mean that it has to have built-in knowledge of what all the system-specific failure states are. It also has to continually sample system internals and externals in order to evaluate whether the system has entered one of those failures states. Lastly, upon detection of a failure, it has to either inform an external agent (like a human being) of the failure, or somehow automatically repair the failure itself by quickly switching in a “good” redundant part(s) for the failed part(s). Piece of cake, no?