Centralized, Federated, Decentralized

1 Prelude

A colleague on LinkedIn.com pointed me toward this paper by Doug Schmidt et al.: “Evaluating Technologies for Tactical Information Management in Net-Centric Systems”. In it, Doug and crew qualitatively (scalability, availability, configurability) and quantitatively (latency, jitter) evaluate three different architectural implementations of the Object Management Group’s (OMG) Data Distribution Service (DDS): centralized, federated, and decentralized.

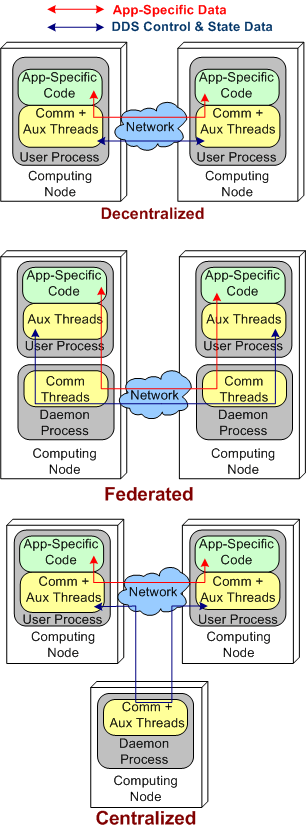

The stacked trio of figures below model the three DDS architecture types. They’re slightly enhanced renderings of the sketches in the paper.

2 Quantitative Comparisons

DDS was specifically designed to meet the demanding latency and jitter (the standard deviation of latency) requirements that are characteristic of streaming, Distributed Real-time and Embedded (DRE) systems like defense and air traffic control radars. Unlike in most client-server, request-response systems, if the data required for human or computer decision-making in a DRE system is not made available in a timely fashion, people could die. It’s as simple and potentially horrible as that.
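To make the two metrics concrete, here is a minimal sketch of how mean latency and jitter (taken, as above, to be the standard deviation of latency) could be computed from a batch of per-message latency samples. The function name and the sample values are made up for illustration; they are not measurements from the paper.

```python
import statistics

def latency_and_jitter(latencies_us):
    """Return (mean latency, jitter) for a list of latency samples.

    Jitter is computed as the population standard deviation of the samples.
    """
    mean = statistics.fmean(latencies_us)
    jitter = statistics.pstdev(latencies_us)
    return mean, jitter

# Hypothetical per-message latencies in microseconds (illustrative only).
samples_us = [100.0, 102.0, 98.0, 100.0]
mean_us, jitter_us = latency_and_jitter(samples_us)
print(f"mean latency = {mean_us:.1f} us, jitter = {jitter_us:.3f} us")
```

Two systems can have the same mean latency and wildly different jitter, which is why both numbers get reported.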

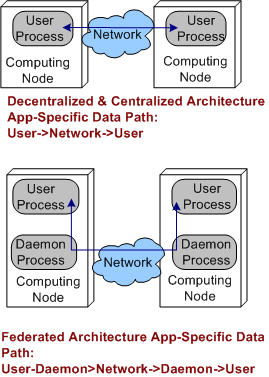

Applying the systems thinking idiom of “purposeful, selective ignorance”, the pics below abstract away the unimportant details of the pics above so that the architecture types can be compared in terms of latency and jitter performance.

By inspecting the figures, it’s a no-brainer, right? The steady-state latency and jitter performance of the decentralized and centralized architectures should be better than that of the federated architecture, because in those two there is no “middleman” daemon for application layer data messages to pass through.

Sure enough, on their two-node test fixture (I don’t know why they even bothered with the one-node fixture, since that really isn’t a “distributed” system in my mind), the Schmidt et al. measurements indicate that the latency/jitter performance of the decentralized and centralized architectures exceeds that of the federated architecture. The performance difference that they measured was on the order of 2X.
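The “middleman” argument can be sketched as a simple hop count along the data path between two apps on different nodes. The paths below are my reading of the simplified figures (an assumption, not something lifted from the paper), and the model deliberately says nothing about how long each hop takes.

```python
# Assumed steady-state data path for one message between apps on two nodes.
# These paths are an illustrative reading of the figures, not taken from the paper.
DATA_PATH = {
    "decentralized": ["publisher", "subscriber"],
    # The central daemon is assumed to handle control traffic only.
    "centralized": ["publisher", "subscriber"],
    # Each node's daemon is assumed to relay application data.
    "federated": ["publisher", "local daemon", "remote daemon", "subscriber"],
}

def hop_count(architecture):
    """Number of process-to-process hops a data message makes."""
    return len(DATA_PATH[architecture]) - 1

for name in DATA_PATH:
    print(f"{name}: {hop_count(name)} hop(s)")
```

More hops means more queuing and more context switches per message, which is the intuition behind expecting worse latency and jitter from the federated layout.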

3 Qualitative Comparisons

In all distributed systems, both DRE and Client-Server types, achieving high operational availability is a huge challenge. Hell, when the system goes bust, fuggedaboud the timeliness of the data, no freakin’ work can get done and panic can and usually does set in. D’oh!

With that scary aspect in mind, let’s look at each of the three architectures in terms of their ability to withstand faults.

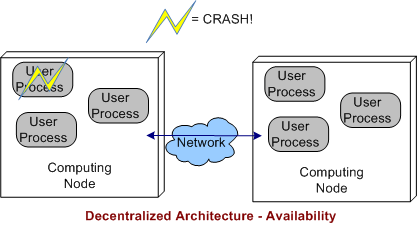

3.1 Decentralized Architecture Availability

In a decentralized architecture, there are no invasive daemons that “leak” into the application plane so we can’t talk about daemon crashes. Thus, right off the bat we can “arguably” say that a decentralized architecture is more resistant to faults than the federated or centralized architectures.

As the picture below shows, when a user application layer process dies, the others can continue to communicate with each other. Depending on what the specific application is required to do during operation, at least some work may be able to still get accomplished even though one or more app components go kaput.

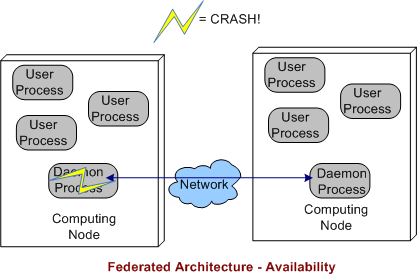

3.2 Federated Architecture Availability

In a federated architecture, when a daemon process dies, a whole node and all the subscriber application user processes running on it are severed from communicating with the user processes running on the other nodes (see the sketch below) in the system. Thus, the federated architecture is “arguably” less fault tolerant than the decentralized and (as we’ll see) centralized architectures. However, through judicious “allocation” of user processes to nodes (the fewer the better – which sort of defeats the purpose of choosing a federation for per node intra-communication performance optimization), some work still may be able to get accomplished when a node’s daemon crashes.

If the node daemons stay viable but a user application dies, then the behavior of a federated, DDS-based system is the same as that of a decentralized one.

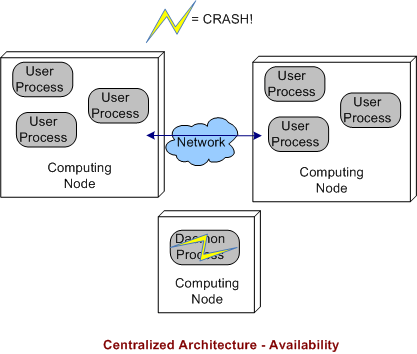

3.3 Centralized Architecture Availability

Finally, we come to the robustness of a centralized DDS architecture. As shown below, since the single daemon overlord in the system is not (or should not be) involved in inter-process application layer data communications, if it crashes, then the system can continue to do its full workload. When a user process crashes instead of, or in addition to, the daemon, then the system’s behavior is the same as that of a decentralized architecture.
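The three fault scenarios above can be boiled down to a toy model that counts which pairs of apps can still exchange data after a crash. Everything in it is hypothetical (the function name, the node and app names), and it assumes that apps sharing a node in a federated system can still talk to each other when their daemon dies, which the discussion above doesn’t actually settle.

```python
from itertools import combinations

def reachable_pairs(architecture, apps_by_node, dead_apps=(), dead_daemon_nodes=()):
    """Return the set of app pairs that can still exchange data.

    Toy model: a dead app drops out of every pair. A dead daemon only matters
    in the federated case, where it cuts its node off from all other nodes.
    Same-node pairs are assumed (not confirmed) to survive a daemon crash.
    """
    live = [(node, app)
            for node, apps in apps_by_node.items()
            for app in apps
            if app not in dead_apps]
    pairs = set()
    for (n1, a1), (n2, a2) in combinations(live, 2):
        crosses_dead_daemon = n1 != n2 and (
            n1 in dead_daemon_nodes or n2 in dead_daemon_nodes)
        if architecture == "federated" and crosses_dead_daemon:
            continue
        pairs.add(frozenset((a1, a2)))
    return pairs

# Hypothetical two-node deployment with two apps per node.
layout = {"node1": ["A", "B"], "node2": ["C", "D"]}
print(len(reachable_pairs("federated", layout, dead_daemon_nodes={"node1"})))
print(len(reachable_pairs("centralized", layout, dead_daemon_nodes={"node1"})))
print(len(reachable_pairs("decentralized", layout, dead_apps={"A"})))
```

With this layout there are six app pairs in total; a daemon crash costs the federated system every cross-node pair, while the centralized system keeps all six.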

4 BD00 Commentary

Because he works on data streaming DRE radar systems, Jimmy likes, I mean BD00 likes, the DDS pub-sub architectural style over broker-based, distributed communication technologies like C/S CORBA and JMS queues. It should be obvious that the latter technologies are not a good match for high availability and low latency DRE applications. Thus, trying to jam fit a new DRE application into a CORBA or JMS communication platform “just because we have one” is a dumb-ass thing to do and is sure to lead to high downstream maintenance costs and a quicker route to archeosclerosis.

Within the DDS space, BD00 prefers the decentralized architecture over the federated and centralized styles because of the semi-objective conclusions arrived at and documented in this post.